激光SLAM之NDT算法(2)-建图

最近的项目里用到了室外激光SLAM进行定位,所以我总结一下项目经验,顺便根据项目最终效果说一下自己对于算法源码中这段代码的理解。

首先,算法框架中用到了不止一种传感器,包括IMU,odometry和GPS等。所有的传感器数据都是为了能构造一个合理且较好的激光里程计。

这段代码很好理解,我大致讲一下流程吧,首先当第一帧点云输入时,我们构造一个位姿向量,x=0,y=0,z=0,旋转角初始化为0,存入map)中,并将其设置为ndt的target参数。当第二针点云进来时,进行点云匹配得到这堆点云的位姿(以lidar为坐标系),由于设置有shift值来判断这帧点云是否距上一帧点云位移了1m,所以我们会根据得到的位姿进行计算,如果小于1m,丢弃,用下一帧点云重复这个过程,如果大于1m,则存入map,并重置它为ndt的target参数,以此类推 ,对下一帧点云重复这个动作。代码如下:

void ndt_mapping::points_callback(const sensor_msgs::PointCloud2::ConstPtr& input)

{

pcl::PointCloud<pcl::PointXYZI> tmp, scan;

pcl::PointCloud<pcl::PointXYZI>::Ptr filtered_scan_ptr(new pcl::PointCloud<pcl::PointXYZI>());

pcl::PointCloud<pcl::PointXYZI>::Ptr transformed_scan_ptr(new pcl::PointCloud<pcl::PointXYZI>());

tf::Quaternion q;

Eigen::Matrix4f t_localizer(Eigen::Matrix4f::Identity());

Eigen::Matrix4f t_base_link(Eigen::Matrix4f::Identity());

//static tf::TransformBroadcaster br;

tf::Transform transform;

pcl::fromROSMsg(*input, tmp);

double r;

Eigen::Vector3d point_pos;

pcl::PointXYZI p;

for (pcl::PointCloud<pcl::PointXYZI>::const_iterator item = tmp.begin(); item != tmp.end(); item++)

{

if(use_imu_){

// deskew(TODO:inplement of predicting pose by imu)

point_pos.x() = (double)item->x;

point_pos.y() = (double)item->y;

point_pos.z() = (double)item->z;

double s = scan_rate_ * (double(item->intensity) - int(item->intensity));

point_pos.x() -= s * current_pose_msg_.pose.position.x;//current_pose_imu_

point_pos.y() -= s * current_pose_msg_.pose.position.y;

point_pos.z() -= s * current_pose_msg_.pose.position.z;

Eigen::Quaterniond start_quat, end_quat, mid_quat;

mid_quat.setIdentity();

end_quat = Eigen::Quaterniond(

current_pose_msg_.pose.orientation.w,

current_pose_msg_.pose.orientation.x,

current_pose_msg_.pose.orientation.y,

current_pose_msg_.pose.orientation.z);

start_quat = mid_quat.slerp(s, end_quat);

point_pos = start_quat.conjugate() * start_quat * point_pos;

point_pos.x() += current_pose_msg_.pose.position.x;

point_pos.y() += current_pose_msg_.pose.position.y;

point_pos.z() += current_pose_msg_.pose.position.z;

p.x = point_pos.x();

p.y = point_pos.y();

p.z = point_pos.z();

}

else{

p.x = (double)item->x;

p.y = (double)item->y;

p.z = (double)item->z;

}

p.intensity = (double)item->intensity;

// minmax

r = sqrt(pow(p.x, 2.0) + pow(p.y, 2.0));

if (min_scan_range_ < r && r < max_scan_range_)

{

scan.push_back(p);

}

}

pcl::PointCloud<pcl::PointXYZI>::Ptr scan_ptr(new pcl::PointCloud<pcl::PointXYZI>(scan));

// Add initial point cloud to velodyne_map

if (initial_scan_loaded == 0)

{

pcl::transformPointCloud(*scan_ptr, *transformed_scan_ptr, tf_btol_);

map_ += *transformed_scan_ptr;

initial_scan_loaded = 1;

}

voxel_grid_filter_.setInputCloud(scan_ptr);

voxel_grid_filter_.filter(*filtered_scan_ptr);

ndt.setInputSource(filtered_scan_ptr);//设置配准的点云

pcl::PointCloud<pcl::PointXYZI>::Ptr map_ptr(new pcl::PointCloud<pcl::PointXYZI>(map_));

if (is_first_map_ == true){

ndt.setInputTarget(map_ptr);//设置点云配准目标

is_first_map_ = false;

}

Eigen::Translation3f init_translation(current_pose_.x, current_pose_.y, current_pose_.z);

Eigen::AngleAxisf init_rotation_x(current_pose_.roll, Eigen::Vector3f::UnitX());

Eigen::AngleAxisf init_rotation_y(current_pose_.pitch, Eigen::Vector3f::UnitY());

Eigen::AngleAxisf init_rotation_z(current_pose_.yaw, Eigen::Vector3f::UnitZ());

Eigen::Matrix4f init_guess =

(init_translation * init_rotation_z * init_rotation_y * init_rotation_x).matrix() * tf_btol_;

pcl::PointCloud<pcl::PointXYZI>::Ptr output_cloud(new pcl::PointCloud<pcl::PointXYZI>);

ndt.align(*output_cloud, init_guess);

t_localizer = ndt.getFinalTransformation();

t_base_link = t_localizer * tf_ltob_;

pcl::transformPointCloud(*scan_ptr, *transformed_scan_ptr, t_localizer);

tf::Matrix3x3 mat_b;

mat_b.setValue(static_cast<double>(t_base_link(0, 0)), static_cast<double>(t_base_link(0, 1)),

static_cast<double>(t_base_link(0, 2)), static_cast<double>(t_base_link(1, 0)),

static_cast<double>(t_base_link(1, 1)), static_cast<double>(t_base_link(1, 2)),

static_cast<double>(t_base_link(2, 0)), static_cast<double>(t_base_link(2, 1)),

static_cast<double>(t_base_link(2, 2)));

// Update current_pose_.

current_pose_.x = t_base_link(0, 3);current_pose_.y = t_base_link(1, 3);current_pose_.z = t_base_link(2, 3);

mat_b.getRPY(current_pose_.roll, current_pose_.pitch, current_pose_.yaw, 1);//mat2rpy

transform.setOrigin(tf::Vector3(current_pose_.x, current_pose_.y, current_pose_.z));

q.setRPY(current_pose_.roll, current_pose_.pitch, current_pose_.yaw); //q from rpy

transform.setRotation(q);//trans from q

br_.sendTransform(tf::StampedTransform(transform, input->header.stamp, "map", "base_link"));

double shift = sqrt(pow(current_pose_.x - previous_pose_.x, 2.0) + pow(current_pose_.y - previous_pose_.y, 2.0));

if (shift >= min_add_scan_shift_)

{

map_ += *transformed_scan_ptr;

previous_pose_.x = current_pose_.x;previous_pose_.y = current_pose_.y;previous_pose_.z = current_pose_.z;

previous_pose_.roll = current_pose_.roll;previous_pose_.pitch = current_pose_.pitch;previous_pose_.yaw = current_pose_.yaw;

ndt.setInputTarget(map_ptr);

sensor_msgs::PointCloud2::Ptr map_msg_ptr(new sensor_msgs::PointCloud2);

pcl::toROSMsg(*map_ptr, *map_msg_ptr);

ndt_map_pub_.publish(*map_msg_ptr);

}

current_pose_msg_.header.frame_id = "map";

current_pose_msg_.header.stamp = input->header.stamp;

current_pose_msg_.pose.position.x = current_pose_.x;current_pose_msg_.pose.position.y = current_pose_.y;current_pose_msg_.pose.position.z = current_pose_.z;

current_pose_msg_.pose.orientation.x = q.x();current_pose_msg_.pose.orientation.y = q.y();current_pose_msg_.pose.orientation.z = q.z();current_pose_msg_.pose.orientation.w = q.w();

current_pose_pub_.publish(current_pose_msg_);

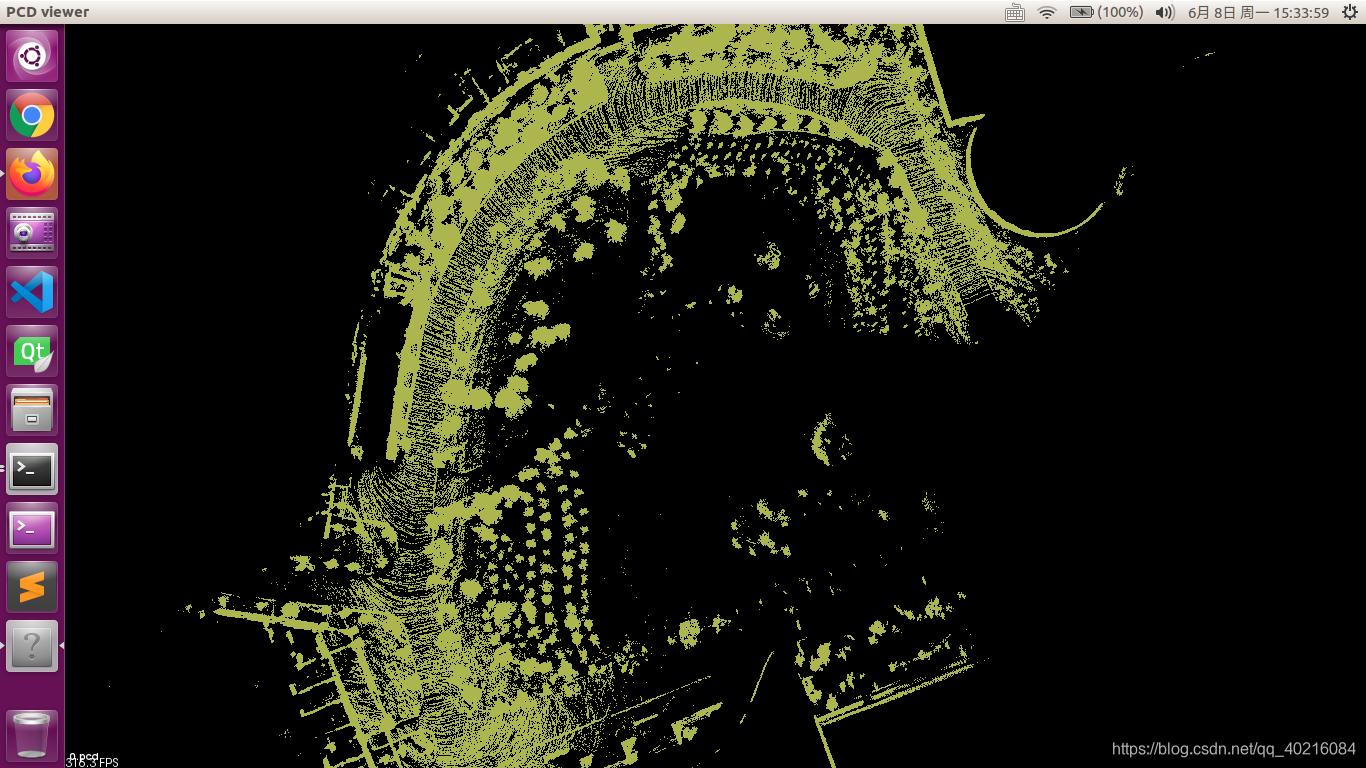

那么,效果如何呢?

这么来看地图构建的还不错,而且场地也很大,但是呢,这个图我并为构建完成,因为在后面的时候,出现了漂移,这个也是可以预期的,毕竟我只用了激光雷达,而且系统中并未有后端设计和回环检测,所以,地图飘是可以预见的

这么来看地图构建的还不错,而且场地也很大,但是呢,这个图我并为构建完成,因为在后面的时候,出现了漂移,这个也是可以预期的,毕竟我只用了激光雷达,而且系统中并未有后端设计和回环检测,所以,地图飘是可以预见的

这段代码可以看出,这中构建激光里程计 方法其实更像是点云拼接。

如果不考虑回环检测和后端优化,我们大可通过加入其他传感器来减小累计误差,注意,我说的是减小,它本身的误差已经很大了,只是相对于只用激光雷达来说,能让有个大致的样子。

另外不得不说的是速度问题,由于我用的是pcl_ndt的,所以速度极慢。建图的准确度对与匹配定位来说,是有一定影响的,所以关于建图这一块,我个人的话,还是需要研究的,如果有新的进度会跟大家分享的,暂时这篇先到这里。