Scraping the movie list and detail pages of 电影天堂 (Movie Heaven, dytt8.net)

```python
import requests
from lxml import etree

base_list_url = 'https://www.dytt8.net'

headers = {
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36"
}
```

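```python
def get_one_page_list(url):
    """Download one list page and return the absolute URLs of its detail pages."""
    r = requests.get(url, headers=headers, timeout=10)
    # The site uses a Chinese GB encoding rather than UTF-8; detect it from the body
    r.encoding = r.apparent_encoding
    html = etree.HTML(r.text)
    # Each movie entry on a list page sits in its own <table class="tbspan">
    url_details = html.xpath("//table[@class='tbspan']//a/@href")
    # Assumes the hrefs are root-relative paths (/html/...), so prefix the domain
    return [base_list_url + url_detail for url_detail in url_details]
```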
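`get_one_page_list` builds full URLs by prefixing `base_list_url` to each `href`, which is only correct when every href is a root-relative path such as `/html/...`. A more forgiving option, sketched below, resolves each link against the list page's own URL with the standard library's `urllib.parse.urljoin`, which also copes with relative and already-absolute links, and drops duplicates. `to_absolute` is a hypothetical helper and the sample hrefs are made up for illustration.

```python
from urllib.parse import urljoin


def to_absolute(page_url, hrefs):
    """Resolve each href against the page it came from, keeping first occurrences only."""
    seen = set()
    result = []
    for href in hrefs:
        absolute = urljoin(page_url, href.strip())
        if absolute not in seen:  # skip links already collected
            seen.add(absolute)
            result.append(absolute)
    return result


page = 'https://www.dytt8.net/html/gndy/dyzz/list_23_1.html'
print(to_absolute(page, [
    '/html/gndy/dyzz/20200101/59999.html',                        # root-relative
    'list_23_2.html',                                             # relative to the list page
    'https://www.dytt8.net/html/gndy/dyzz/20200101/59999.html',   # already absolute
]))
```

With this helper, `get_one_page_list` could return `to_absolute(url, url_details)` instead of concatenating strings.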

```python
def parse_info(info, begin):
    """Remove the field label `begin` (e.g. '◎年 代') from a line and return the value."""
    return info.replace(begin, '').strip()
```
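`parse_info` and the `startswith` checks compare labels character by character, so `'◎年 代'` written with one ASCII space will not match a line whose label is padded differently. Pages of this kind often pad labels with full-width spaces (U+3000), sometimes two of them; that is an assumption worth checking against the raw HTML. The hypothetical `split_label` below ignores any whitespace inside the label, ASCII or full-width, and returns the value, or `None` when the line carries a different label.

```python
def split_label(line, label):
    """Return the text after `label` in `line`, or None if the line has another label.

    Whitespace between the label's characters is ignored, so '◎年 代' also
    matches '◎年　　代' padded with full-width spaces (U+3000).
    split_label is a hypothetical stand-in for parse_info + startswith.
    """
    wanted = [ch for ch in label if not ch.isspace()]
    text = line.strip()
    pos = 0
    for ch in wanted:
        # Skip whitespace in the line; str.isspace() is True for U+3000 as well
        while pos < len(text) and text[pos].isspace():
            pos += 1
        if pos >= len(text) or text[pos] != ch:
            return None
        pos += 1
    return text[pos:].strip()


print(split_label('◎年\u3000\u3000代\u30002019', '◎年 代'))  # 2019
print(split_label('◎产\u3000\u3000地\u3000美国', '◎年 代'))  # None
```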

def parse_detail_page(url):

r = requests.get(url, headers=headers)

r.encoding = r.apparent_encoding

text = r.text

html = etree.HTML(text)

movie = {}

title = html.xpath("//div[@class='title_all']//font/text()")[0]

movie['title'] = title

zoom = html.xpath("//div[@id='Zoom']")[0]

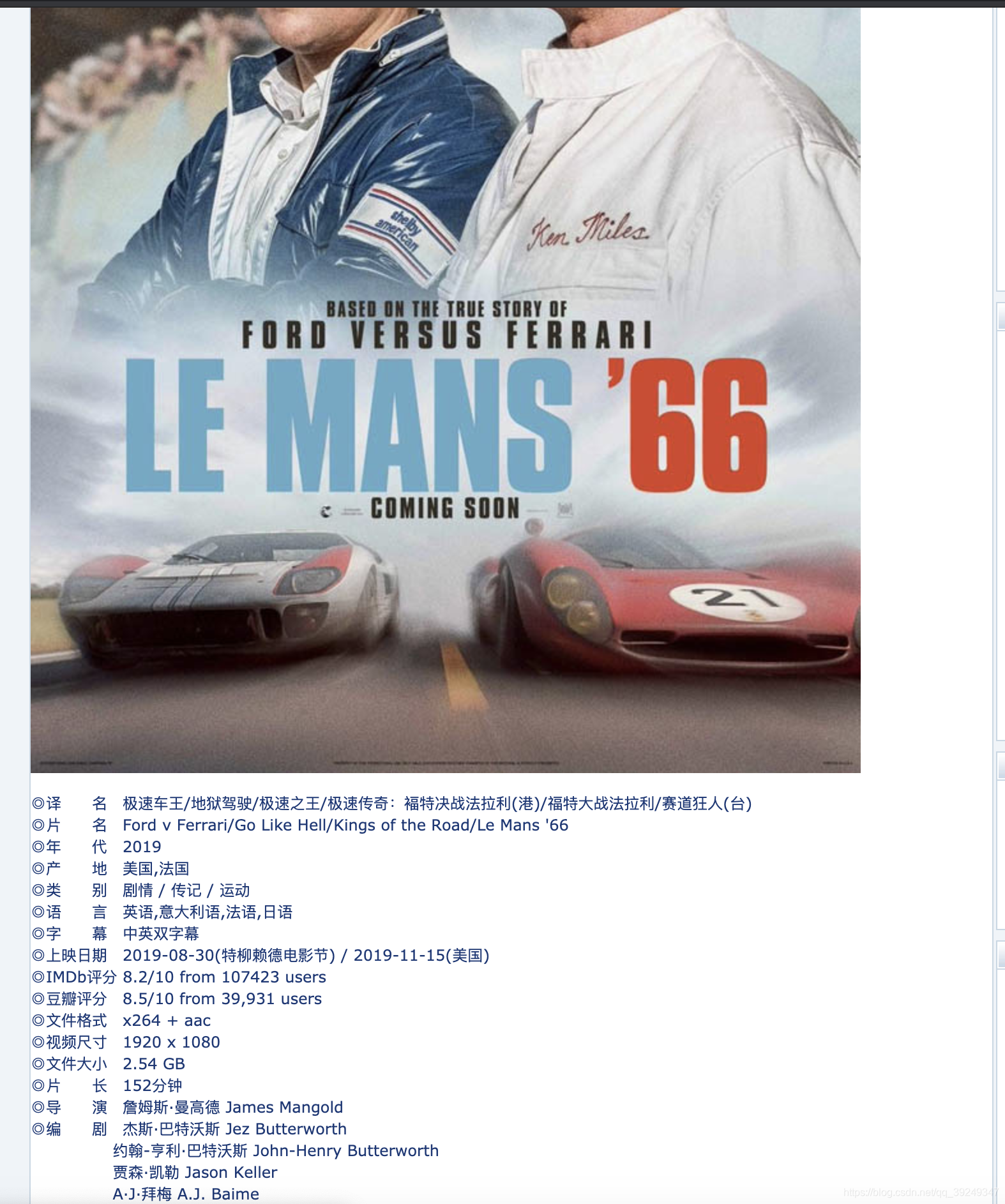

cover = zoom.xpath(".//img//@src")[0] if len(zoom.xpath(".//img//@src")) > 0 else ''

movie['cover'] = cover

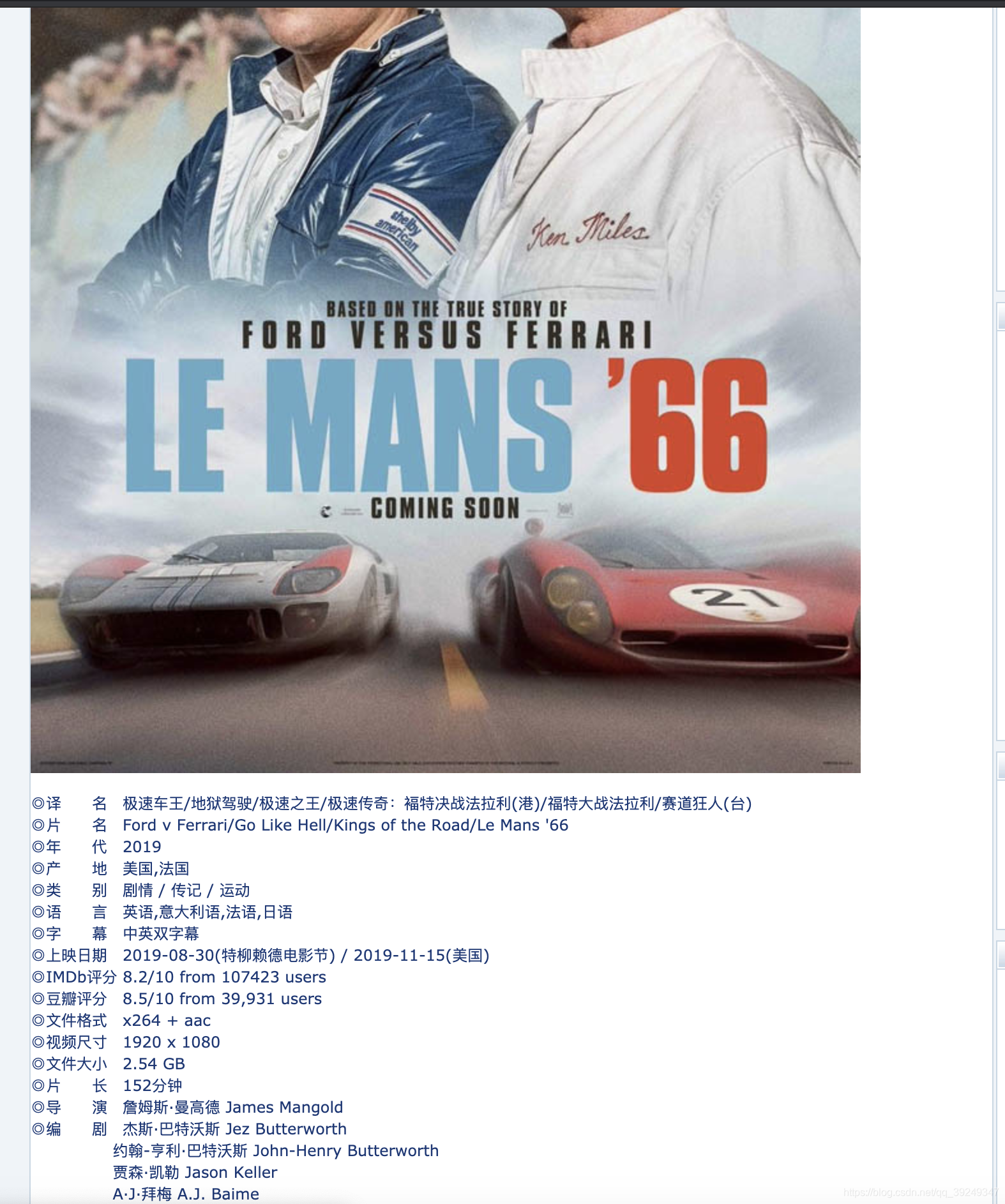

infos = zoom.xpath(".//p//text()")

download_url = zoom.xpath(".//td[@style='WORD-WRAP: break-word']//a/text()")[0]

movie['download_url'] = download_url

for index, info in enumerate(infos):

if info.startswith('◎年 代'):

date = parse_info(info, '◎年 代')

movie['date'] = date

elif info.startswith('◎产 地'):

country = parse_info(info, '◎产 地')

movie['country'] = country

elif info.startswith('◎类 别'):

categories = parse_info(info, '◎类 别')

movie['categories'] = categories

elif info.startswith('◎豆瓣评分'):

score = parse_info(info, '◎豆瓣评分')

movie['score'] = score

elif info.startswith('◎片 长'):

duration = parse_info(info, '◎片 长')

movie['duration'] = duration

elif info.startswith('◎主 演'):

actor = parse_info(info, '◎主 演')

actors = []

actors.append(actor)

for i in range(index + 1, len(infos) - 1):

if infos[i].startswith('◎标 签'):

break

actors.append(infos[i])

movie['actors'] = actors

elif info.startswith('◎简 介'):

intro = infos[index + 1]

movie['intro'] = intro

return movie
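The long `if`/`elif` chain in `parse_detail_page` can also be written table-driven: one dictionary maps each single-line label to its key, and only the multi-line fields need their own branch, so adding a field becomes a one-line change. The sketch below works on a plain list of strings (the kind of list `zoom.xpath(".//p//text()")` yields), so it can be tried without downloading anything. `FIELDS`, `parse_infos` and the sample lines are hypothetical; the labels are copied from the script, and the synopsis is left out for brevity. Slicing off the prefix also avoids `str.replace`, which would remove the label text anywhere in the line.

```python
# Single-line fields: label as written in the script -> key in the result dict
FIELDS = {
    '◎年 代': 'date',
    '◎产 地': 'country',
    '◎类 别': 'categories',
    '◎豆瓣评分': 'score',
    '◎片 长': 'duration',
}


def parse_infos(infos):
    """Map the text nodes of the #Zoom block to a dict of movie fields."""
    lines = [line.strip() for line in infos]
    movie = {}
    for index, line in enumerate(lines):
        label = next((lab for lab in FIELDS if line.startswith(lab)), None)
        if label is not None:
            movie[FIELDS[label]] = line[len(label):].strip()
        elif line.startswith('◎主 演'):
            # Actors continue on the following lines until the next ◎ label
            actors = [line[len('◎主 演'):].strip()]
            for follow in lines[index + 1:]:
                if follow.startswith('◎'):
                    break
                if follow:
                    actors.append(follow)
            movie['actors'] = actors
    return movie


sample = ['◎年 代 2019', '◎产 地 美国', '◎主 演 Actor A', 'Actor B', '',
          '◎标 签 剧情', '◎豆瓣评分 7.9/10']
print(parse_infos(sample))
```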

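```python
def main():
    # List pages are numbered list_23_1.html, list_23_2.html, ...
    url_template = "https://www.dytt8.net/html/gndy/dyzz/list_23_{}.html"
    movies = []
    for page in range(1, 8):  # list pages 1 to 7
        # Format a fresh URL from the template; overwriting it would repeat page 1
        page_url = url_template.format(page)
        for detail_url in get_one_page_list(page_url):
            try:
                movie = parse_detail_page(detail_url)
            except Exception as exc:
                # Skip detail pages that fail to download or lack an expected element
                print(f'Skipping {detail_url}: {exc}')
                continue
            movies.append(movie)
            print(movie)
    return movies


if __name__ == '__main__':
    main()
```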
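Two pitfalls in the crawl loop are easy to miss. Writing `url = url.format(i)` overwrites the template, so from the second iteration on there is no `{}` left to fill and page 1 is fetched again. And `except():` names an empty tuple of exception types, so it catches nothing and the first failing detail page stops the whole run. The sketch below isolates both pieces so they can be checked offline; `page_urls` and `crawl` are hypothetical helpers, and `list_links`/`parse_detail` stand in for `get_one_page_list` and `parse_detail_page`.

```python
def page_urls(template, first, last):
    """Return one list-page URL per page number, first..last inclusive."""
    # Format the untouched template each time instead of overwriting it
    return [template.format(n) for n in range(first, last + 1)]


def crawl(urls, list_links, parse_detail):
    """Parse every detail link on every list page; record failures instead of stopping."""
    movies, failed = [], []
    for url in urls:
        for link in list_links(url):
            try:
                movies.append(parse_detail(link))
            except Exception:  # `except ():` would match no exception at all
                failed.append(link)
    return movies, failed


# Demonstration that `except ():` lets errors through
try:
    try:
        [][0]
    except ():
        pass
except IndexError:
    print('IndexError escaped `except ():`')
```

Wired into the script, `crawl(page_urls(url_template, 1, 7), get_one_page_list, parse_detail_page)` would replace the nested loops in `main`.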