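Docker Swarm and docker service

Introduction

Docker Swarm is a tool for managing Docker clusters; it turns a group of Docker hosts into a single virtual host. Swarm uses the standard Docker API as its front end, so tools that already speak that API (such as Compose, Krane, Deis, docker-py, and Docker itself) can be integrated with Swarm easily.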

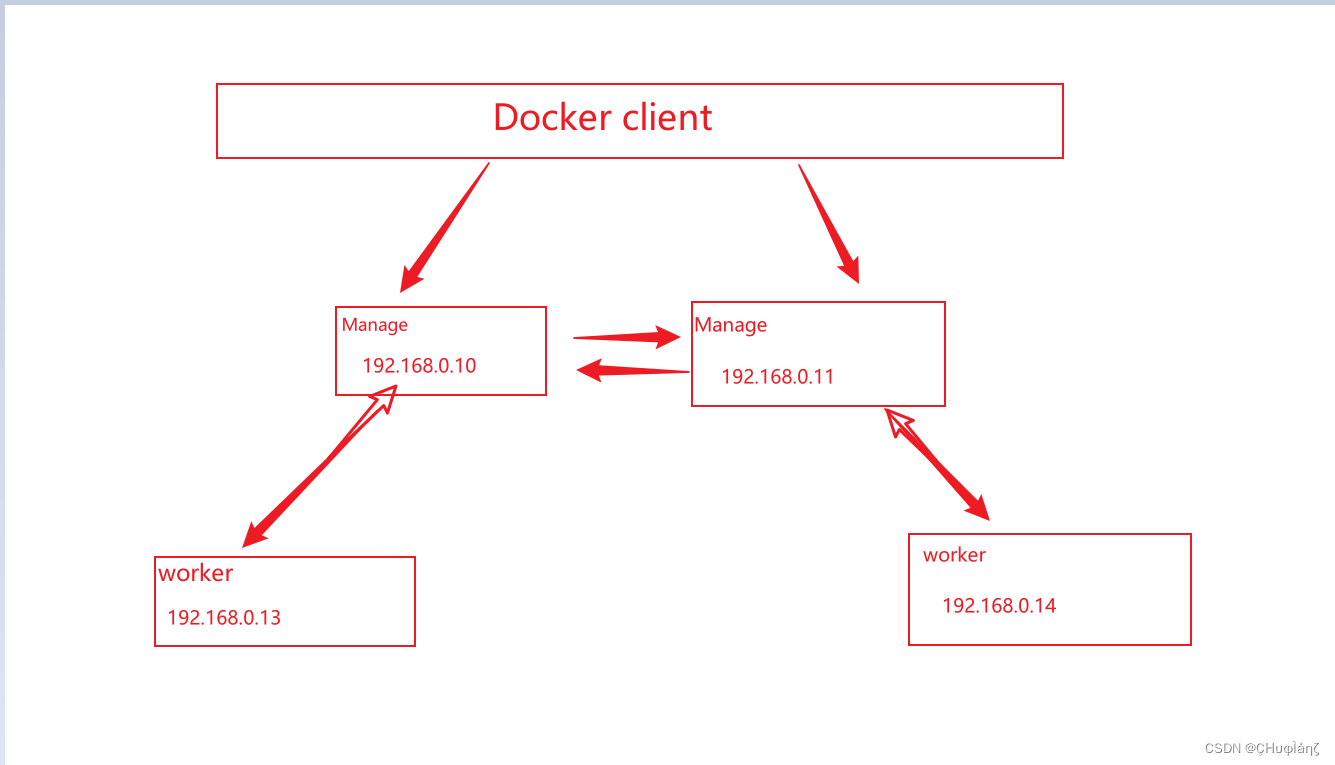

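When Swarm manages a Docker cluster, there is a swarm manager and several swarm nodes. The swarm daemon runs on the swarm manager, and users only need to talk to the swarm manager; the manager then uses information from the discovery service to pick a swarm node to run the container.

Note: the swarm daemon is only a task scheduler. It does not run containers itself; it only receives requests from the Docker client and schedules a suitable swarm node to run the container. This means that even if the swarm daemon goes down for some reason, containers that are already running are not affected.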

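Raft consensus algorithm

Raft is the consensus protocol the manager nodes use to agree on cluster state; the swarm stays manageable only while a majority of managers are alive. It is covered in more detail later.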

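Docker Swarm features

- Swarm exposes the Docker API. The benefit is that an existing system built on Docker Engine can be switched over to Swarm smoothly, without changing the existing system.

- Existing Docker experience carries over to Swarm, so it is easy to pick up, and both the learning cost and the custom-development cost are low. Swarm itself focuses on Docker cluster management, is lightweight, and uses few resources. It follows the "batteries included but swappable" principle: a plugin mechanism in which each Swarm module exposes an API, so you can supply your own implementation where needed.

- Swarm itself supports Docker command parameters well. Swarm is currently released in step with Docker, so new Docker features show up in Swarm right away.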

Building the cluster

Environment:

docker1:192.168.0.10

docker2:192.168.0.11

docker3:192.168.0.12

docker4:192.168.0.13

- This setup uses two managers and two workers; a typical cluster has multiple managers and multiple workers.

Help command

[root@localhost ~]# docker swarm --help

Usage: docker swarm COMMAND

Manage Swarm

Commands:

ca Display and rotate the root CA

init Initialize a swarm

join Join a swarm as a node and/or manager

join-token Manage join tokens

leave Leave the swarm

unlock Unlock swarm

unlock-key Manage the unlock key

update Update the swarm

Run 'docker swarm COMMAND --help' for more information on a command.

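A host can have both a public and a private address; --advertise-addr specifies which address the node advertises to the rest of the swarm.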

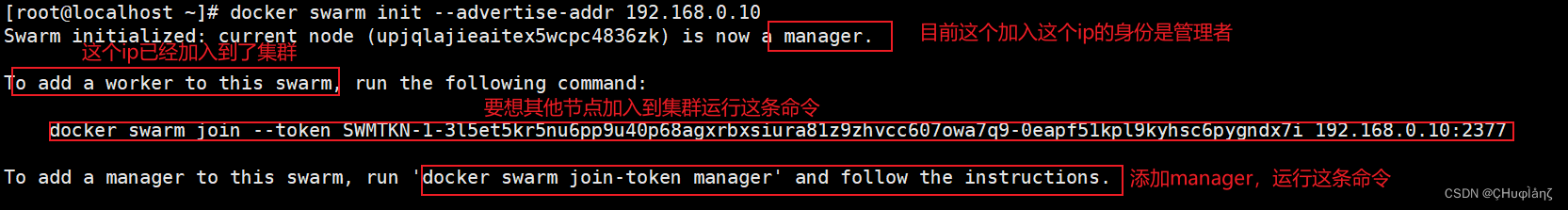

Make docker1 a manager

[root@localhost ~]# docker swarm init --advertise-addr 192.168.0.10

Swarm initialized: current node (upjqlajieaitex5wcpc4836zk) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-3l5et5kr5nu6pp9u40p68agxrbxsiura81z9zhvcc607owa7q9-0eapf51kpl9kyhsc6pygndx7i 192.168.0.10:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

- Initialize a node: docker swarm init

- Join a node: docker swarm join

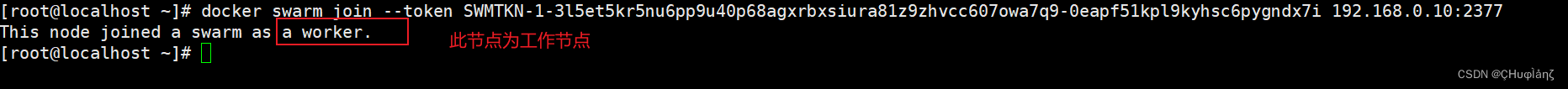

Add docker3 to the cluster

docker swarm join --token SWMTKN-1-3l5et5kr5nu6pp9u40p68agxrbxsiura81z9zhvcc607owa7q9-0eapf51kpl9kyhsc6pygndx7i 192.168.0.10:2377

[root@localhost ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

ptq9lo57omx4njggpx6802963 localhost.localdomain Ready Active 20.10.14

upjqlajieaitex5wcpc4836zk * localhost.localdomain Ready Active Leader 20.10.14

Generate a token and add docker4 to the cluster as well

docker swarm join-token worker

[root@localhost ~]# docker swarm join-token worker

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-3l5et5kr5nu6pp9u40p68agxrbxsiura81z9zhvcc607owa7q9-0eapf51kpl9kyhsc6pygndx7i 192.168.0.10:2377

[root@localhost docker]# date

Tue May 3 09:16:37 EDT 2022

[root@localhost docker]# docker swarm join --token SWMTKN-1-3l5et5kr5nu6pp9u40p68agxrbxsiura81z9zhvcc607owa7q9-0eapf51kpl9kyhsc6pygndx7i 192.168.0.10:2377

This node joined a swarm as a worker.

View node information

[root@localhost ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

ptq9lo57omx4njggpx6802963 localhost.localdomain Ready Active 20.10.14

ravewembyrqlkzzhvtiv5bu4p localhost.localdomain Ready Active 20.10.14

upjqlajieaitex5wcpc4836zk * localhost.localdomain Ready Active Leader 20.10.14

Add docker2 to the cluster as a manager

- Generate the token

[root@localhost ~]# docker swarm join-token manager

To add a manager to this swarm, run the following command:

docker swarm join --token SWMTKN-1-3l5et5kr5nu6pp9u40p68agxrbxsiura81z9zhvcc607owa7q9-4yq8p8z0995nr5t13w4hp45lw 192.168.0.10:2377

- Join

[root@localhost docker]# docker swarm join --token SWMTKN-1-3l5et5kr5nu6pp9u40p68agxrbxsiura81z9zhvcc607owa7q9-4yq8p8z0995nr5t13w4hp45lw 192.168.0.10:2377

This node joined a swarm as a manager.

- Verify

[root@localhost ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

5c9polhcz9xq672qk05cwqy6p localhost.localdomain Ready Active Reachable 20.10.14

ptq9lo57omx4njggpx6802963 localhost.localdomain Ready Active 20.10.14

ravewembyrqlkzzhvtiv5bu4p localhost.localdomain Ready Active 20.10.14

upjqlajieaitex5wcpc4836zk * localhost.localdomain Ready Active Leader 20.10.14

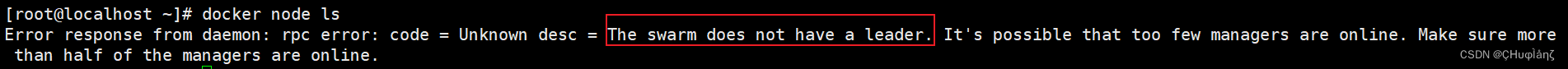

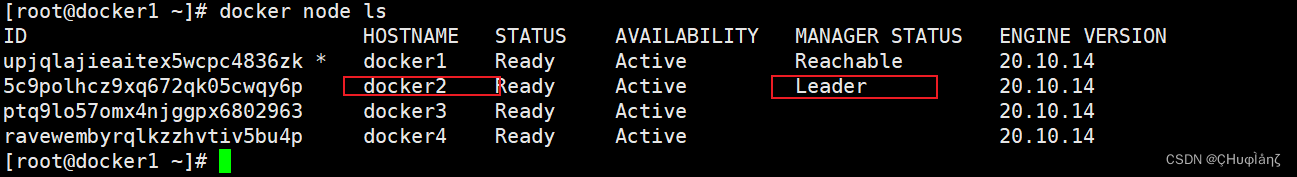

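Raft protocol

Two managers, two workers: if one manager goes down, the remaining manager cannot manage the cluster either, because the swarm has lost its quorum.

Raft protocol: the swarm is usable only while a majority of managers are alive. More than one manager must stay online, so a cluster needs at least three managers to be highly available (HA).

Suppose docker1 goes down: the other manager can no longer be used.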

## Stop Docker on docker1

[root@localhost ~]# systemctl stop docker

Warning: Stopping docker.service, but it can still be activated by:

docker.socket

## Check on docker2 whether the cluster is still usable (it returns an error!)

[root@localhost ~]# docker node ls

Error response from daemon: rpc error: code = Unknown desc = The swarm does not have a leader. It's possible that too few managers are online. Make sure more than half of the managers are online.

After docker1 is restarted, it is no longer the Leader

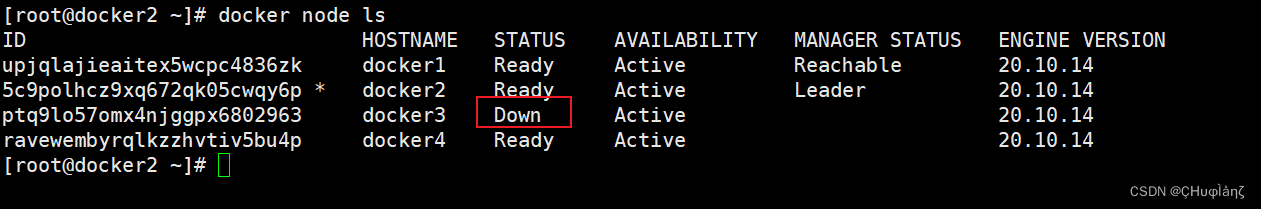
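The majority rule behind this failure is plain arithmetic. The sketch below is only an illustration of that arithmetic, not something Docker ships: the helper names quorum and tolerated are made up for this example. It shows why two managers tolerate no failures while three tolerate one.

```shell
#!/usr/bin/env bash
# quorum N: how many managers must be online in a swarm with N managers
# (a strict majority). Helper names are hypothetical, for illustration only.
quorum() { echo $(( $1 / 2 + 1 )); }

# tolerated N: how many managers can fail before the majority is lost.
tolerated() { echo $(( ($1 - 1) / 2 )); }

for n in 1 2 3 5; do
  echo "managers=$n quorum=$(quorum "$n") tolerated=$(tolerated "$n")"
done
```

With two managers the quorum is two, so losing either one leaves the swarm without a leader, which matches the error shown above.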

docker3 leaves the cluster

[root@docker3 ~]# docker swarm leave

Node left the swarm.

Three managers (docker3 rejoins as a manager)

[root@docker2 ~]# docker swarm join-token manager

To add a manager to this swarm, run the following command:

docker swarm join --token SWMTKN-1-3l5et5kr5nu6pp9u40p68agxrbxsiura81z9zhvcc607owa7q9-4yq8p8z0995nr5t13w4hp45lw 192.168.0.11:2377

[root@docker3 ~]# docker swarm join --token SWMTKN-1-3l5et5kr5nu6pp9u40p68agxrbxsiura81z9zhvcc607owa7q9-4yq8p8z0995nr5t13w4hp45lw 192.168.0.11:2377

This node joined a swarm as a manager.

Stop docker2 (manager)

There are now three managers, so stopping one does not bring the cluster down.

[root@docker2 ~]# systemctl stop docker

Warning: Stopping docker.service, but it can still be activated by:

docker.socket

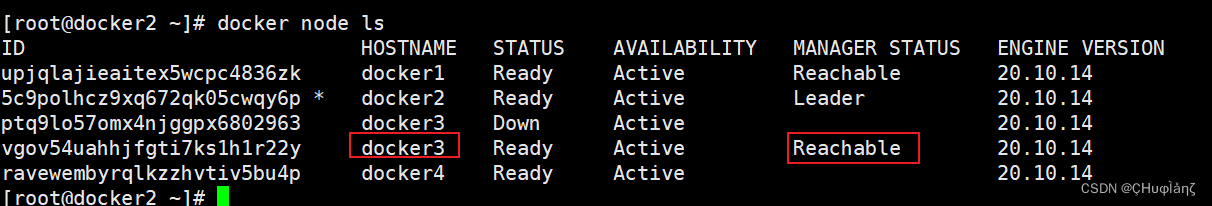

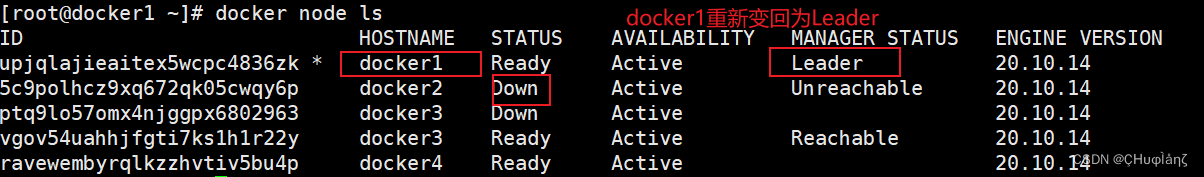

docker3 (manager) leaves the cluster

[root@docker3 ~]# docker swarm leave --force

Node left the swarm.

[root@docker1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

upjqlajieaitex5wcpc4836zk * docker1 Ready Active Leader 20.10.14

5c9polhcz9xq672qk05cwqy6p docker2 Ready Active Reachable 20.10.14

ptq9lo57omx4njggpx6802963 docker3 Down Active 20.10.14

vgov54uahhjfgti7ks1h1r22y docker3 Down Active Unreachable 20.10.14

ravewembyrqlkzzhvtiv5bu4p docker4 Ready Active 20.10.14

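Dissolving the cluster

- Drain the containers off every node in the cluster (docker node update --availability drain g36lvv23ypjd8v7ovlst2n3yt)

- Have all the cluster nodes leave first (docker swarm leave)

- Remove the specified node (docker node rm g36lvv23ypjd8v7ovlst2n3yt)

- Have all the managers leave (docker swarm leave --force)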
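The steps above could be strung together roughly as follows. This is a sketch under assumptions, not a tested teardown script: the run wrapper, the DRY_RUN variable, and the function names are all invented for this example, and by default the script only prints the commands. In practice the leave commands run on the node that is leaving, while the drain and rm commands run on a manager.

```shell
#!/usr/bin/env bash
# Dry-run by default: print each docker command instead of executing it.
# Set DRY_RUN=0 to really run them. (Wrapper and variable are hypothetical.)
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# On a manager: drain a node so its tasks are rescheduled elsewhere.
drain_node() { run docker node update --availability drain "$1"; }
# On the node itself: leave the swarm.
leave_swarm() { run docker swarm leave; }
# On a manager: remove a node that has already left.
remove_node() { run docker node rm "$1"; }
# On a manager: a manager has to pass --force to leave.
leave_as_manager() { run docker swarm leave --force; }

NODE_ID="g36lvv23ypjd8v7ovlst2n3yt"   # node ID taken from the steps above
drain_node "$NODE_ID"
leave_swarm
remove_node "$NODE_ID"
leave_as_manager
```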

Docker services (elastic creation)

Cluster: swarm, docker service

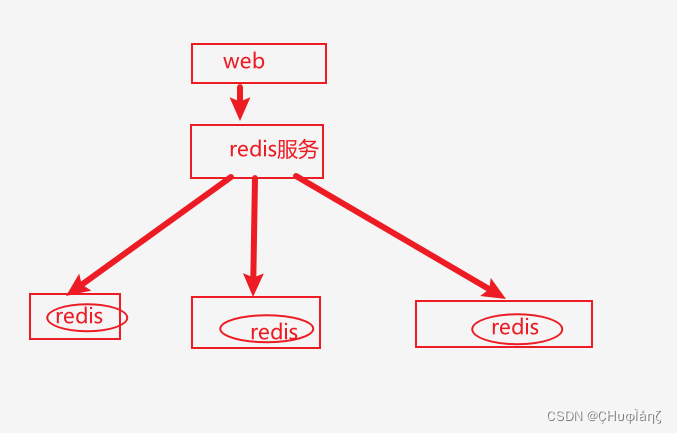

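Suppose there is a web service. When clients access it, the redis service can distribute the requests at random across the other redis hosts.

Dynamic scaling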

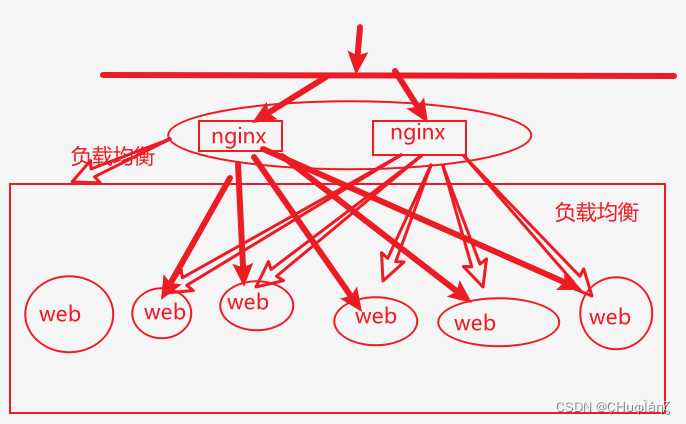

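As the figure below shows: every time a new web instance is added, nginx has to be reconfigured. If all the web instances are packed into one unit, a service, nginx only needs to point at the service, which saves the repeated configuration.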

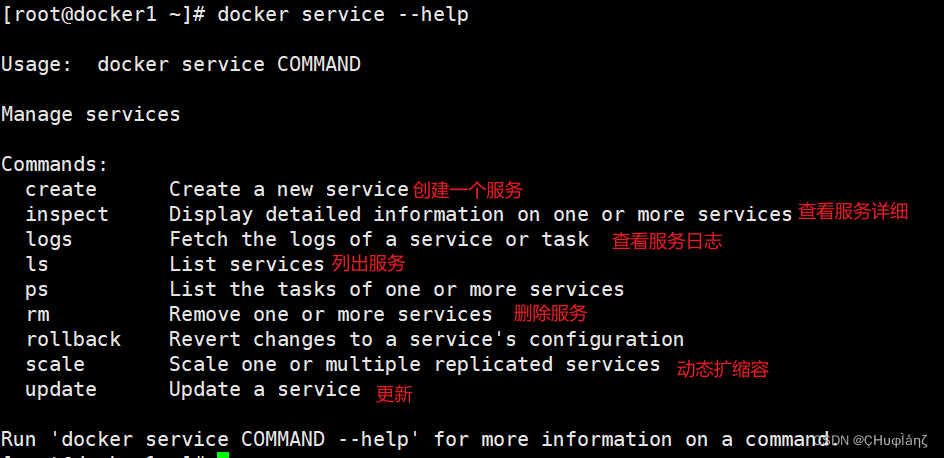

docker service help

Help command

Creating a service

docker service works much like docker run!

- docker run ## starts a container; no scaling support

- docker service ## supports scaling, gray releases, and canary releases

[root@docker1 ~]# docker service create -p 8888:80 --name nginx nginx

fbuiguhm0cy1gykdc7ujkt6kc

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

[root@docker1 ~]# docker service ps nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

rm2gqp5ive35 nginx.1 nginx:latest docker4 Running Running 2 minutes ago

View the service (replicated mode)

There is only one replica here

[root@docker1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

fbuiguhm0cy1 nginx replicated 1/1 nginx:latest *:8888->80/tcp

You can see that the replica is running on docker4

[root@docker4 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9b869571d1ed nginx:latest "/docker-entrypoint.…" 5 minutes ago Up 4 minutes 80/tcp nginx.1.rm2gqp5ive35g86fya36pg32y

Help for updating a service

[root@docker1 ~]# docker service update --help

Usage: docker service update [OPTIONS] SERVICE

Update a service

Options:

--args command Service command args

--cap-add list Add Linux capabilities

--cap-drop list Drop Linux capabilities

--config-add config Add or update a config file on a service

--config-rm list Remove a configuration file

--constraint-add list Add or update a placement constraint

--constraint-rm list Remove a constraint

--container-label-add list Add or update a container label

--container-label-rm list Remove a container label by its key

--credential-spec credential-spec Credential spec for managed service account (Windows only)

-d, --detach Exit immediately instead of waiting for the service to converge

--dns-add list Add or update a custom DNS server

--dns-option-add list Add or update a DNS option

--dns-option-rm list Remove a DNS option

--dns-rm list Remove a custom DNS server

--dns-search-add list Add or update a custom DNS search domain

--dns-search-rm list Remove a DNS search domain

--endpoint-mode string Endpoint mode (vip or dnsrr)

--entrypoint command Overwrite the default ENTRYPOINT of the image

--env-add list Add or update an environment variable

--env-rm list Remove an environment variable

--force Force update even if no changes require it

--generic-resource-add list Add a Generic resource

--generic-resource-rm list Remove a Generic resource

--group-add list Add an additional supplementary user group to the container

--group-rm list Remove a previously added supplementary user group from the container

--health-cmd string Command to run to check health

--health-interval duration Time between running the check (ms|s|m|h)

--health-retries int Consecutive failures needed to report unhealthy

--health-start-period duration Start period for the container to initialize before counting retries towards unstable (ms|s|m|h)

--health-timeout duration Maximum time to allow one check to run (ms|s|m|h)

--host-add list Add a custom host-to-IP mapping (host:ip)

--host-rm list Remove a custom host-to-IP mapping (host:ip)

--hostname string Container hostname

--image string Service image tag

--init Use an init inside each service container to forward signals and reap processes

--isolation string Service container isolation mode

--label-add list Add or update a service label

--label-rm list Remove a label by its key

--limit-cpu decimal Limit CPUs

--limit-memory bytes Limit Memory

--limit-pids int Limit maximum number of processes (default 0 = unlimited)

--log-driver string Logging driver for service

--log-opt list Logging driver options

--max-concurrent uint Number of job tasks to run concurrently (default equal to --replicas)

--mount-add mount Add or update a mount on a service

--mount-rm list Remove a mount by its target path

--network-add network Add a network

--network-rm list Remove a network

--no-healthcheck Disable any container-specified HEALTHCHECK

--no-resolve-image Do not query the registry to resolve image digest and supported platforms

--placement-pref-add pref Add a placement preference

--placement-pref-rm pref Remove a placement preference

--publish-add port Add or update a published port

--publish-rm port Remove a published port by its target port

-q, --quiet Suppress progress output

--read-only Mount the container's root filesystem as read only

--replicas uint Number of tasks

--replicas-max-per-node uint Maximum number of tasks per node (default 0 = unlimited)

--reserve-cpu decimal Reserve CPUs

--reserve-memory bytes Reserve Memory

--restart-condition string Restart when condition is met ("none"|"on-failure"|"any")

--restart-delay duration Delay between restart attempts (ns|us|ms|s|m|h)

--restart-max-attempts uint Maximum number of restarts before giving up

--restart-window duration Window used to evaluate the restart policy (ns|us|ms|s|m|h)

--rollback Rollback to previous specification

--rollback-delay duration Delay between task rollbacks (ns|us|ms|s|m|h)

--rollback-failure-action string Action on rollback failure ("pause"|"continue")

--rollback-max-failure-ratio float Failure rate to tolerate during a rollback

--rollback-monitor duration Duration after each task rollback to monitor for failure (ns|us|ms|s|m|h)

--rollback-order string Rollback order ("start-first"|"stop-first")

--rollback-parallelism uint Maximum number of tasks rolled back simultaneously (0 to roll back all at once)

--secret-add secret Add or update a secret on a service

--secret-rm list Remove a secret

--stop-grace-period duration Time to wait before force killing a container (ns|us|ms|s|m|h)

--stop-signal string Signal to stop the container

--sysctl-add list Add or update a Sysctl option

--sysctl-rm list Remove a Sysctl option

-t, --tty Allocate a pseudo-TTY

--ulimit-add ulimit Add or update a ulimit option (default [])

--ulimit-rm list Remove a ulimit option

--update-delay duration Delay between updates (ns|us|ms|s|m|h)

--update-failure-action string Action on update failure ("pause"|"continue"|"rollback")

--update-max-failure-ratio float Failure rate to tolerate during an update

--update-monitor duration Duration after each task update to monitor for failure (ns|us|ms|s|m|h)

--update-order string Update order ("start-first"|"stop-first")

--update-parallelism uint Maximum number of tasks updated simultaneously (0 to update all at once)

-u, --user string Username or UID (format: <name|uid>[:<group|gid>])

--with-registry-auth Send registry authentication details to swarm agents

-w, --workdir string Working directory inside the container
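As a rough illustration of how a few of the options listed above can be combined, the sketch below rolls a service to a new image one task at a time and rolls back if the update fails. It is an assumption-laden example rather than a recommended procedure: the run wrapper, the DRY_RUN variable, the function names, and the nginx:1.25 tag are all introduced here for illustration, and by default the script only prints the commands.

```shell
#!/usr/bin/env bash
# Dry-run by default: print each docker command instead of executing it.
# Set DRY_RUN=0 to really run them. (Wrapper and variable are hypothetical.)
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Update one task at a time, wait 10s between tasks, and roll back
# automatically if the update fails.
rolling_update() {
  service="$1"
  image="$2"
  run docker service update \
    --image "$image" \
    --update-parallelism 1 \
    --update-delay 10s \
    --update-failure-action rollback \
    "$service"
}

# Return the service to its previous specification.
rollback() { run docker service update --rollback "$1"; }

rolling_update nginx nginx:1.25   # the image tag is an example, not from the article
rollback nginx
```

Updating a single task at a time keeps the other replicas serving traffic while each task is replaced, which only helps when the service runs more than one replica.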

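Adding replicas (dynamic scaling)

Suppose traffic grows too heavy: to relieve the pressure on the servers and improve performance, we need to add more replicas.

Here you can see that, after the replicas are added, nginx runs on docker1, docker2, and docker4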

[root@docker1 ~]# docker service update --replicas 3 nginx

nginx

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

[root@docker1 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d6d208ed0654 nginx:latest "/docker-entrypoint.…" 36 seconds ago Up 33 seconds 80/tcp nginx.3.tv86k9fw82vtvdknvw5smgimc

[root@docker2 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

b6b94f4dc0d9 nginx:latest "/docker-entrypoint.…" 22 seconds ago Up 20 seconds 80/tcp nginx.2.ugkff9zoq5k1icg40wq2eklpf

[root@docker4 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9b869571d1ed nginx:latest "/docker-entrypoint.…" 12 minutes ago Up 12 minutes 80/tcp nginx.1.rm2gqp5ive35g86fya36pg32y

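This service can be reached through any node in the cluster, and it can be scaled up and down dynamically by changing the number of replicas!

Scaling back to one replica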

[root@docker1 ~]# docker service update --replicas 1 nginx

nginx

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

[root@docker4 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9b869571d1ed nginx:latest "/docker-entrypoint.…" 20 minutes ago Up 20 minutes 80/tcp nginx.1.rm2gqp5ive35g86fya36pg32y

Another way to scale dynamically (docker service scale)

This is essentially the same as docker service update --replicas above.

[root@docker1 ~]# docker service ps nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

rm2gqp5ive35 nginx.1 nginx:latest docker4 Running Running 23 minutes ago

[root@docker1 ~]# docker service scale --help

Usage: docker service scale SERVICE=REPLICAS [SERVICE=REPLICAS...]

Scale one or multiple replicated services

Options:

-d, --detach Exit immediately instead of waiting for the service to converge

[root@docker1 ~]# docker service scale nginx=5

nginx scaled to 5

overall progress: 5 out of 5 tasks

1/5: running [==================================================>]

2/5: running [==================================================>]

3/5: running [==================================================>]

4/5: running [==================================================>]

5/5: running [==================================================>]

verify: Service converged

Removing a service (docker service rm)

[root@docker1 ~]# docker service rm nginx

nginx

[root@docker1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS