Hadoop Big Data Applications: Connecting an NFS Gateway to an HDFS Cluster

I. Experiment

1. Environment

(1) Hosts

Table 1. Hosts

| Host | Roles | Software | Version | IP | Notes |
| --- | --- | --- | --- | --- | --- |
| hadoop | NameNode (deployed), SecondaryNameNode (deployed), ResourceManager (deployed) | hadoop | 2.7.7 | 192.168.204.50 | |
| node01 | DataNode (deployed), NodeManager (deployed) | hadoop | 2.7.7 | 192.168.204.51 | |
| node02 | DataNode (deployed), NodeManager (deployed) | hadoop | 2.7.7 | 192.168.204.52 | |
| node03 | DataNode (deployed), NodeManager (deployed) | hadoop | 2.7.7 | 192.168.204.53 | |
| nfsgateway | Portmap, Nfs3 | hadoop | 2.7.7 | 192.168.204.56 | |
| node04 | | nfs-utils | | 192.168.204.54 | NFS client |
| node05 | | nfs-utils | | 192.168.204.55 | NFS client |

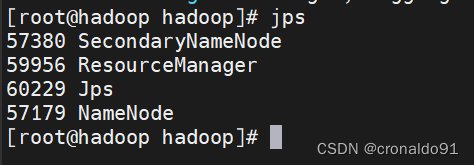

(2) Check the running daemons with jps

hadoop node

[root@hadoop hadoop]# jps

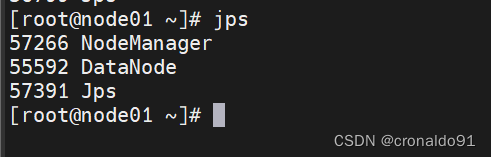

node01节点

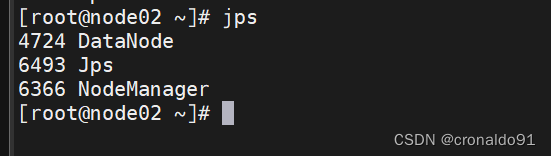

node02节点

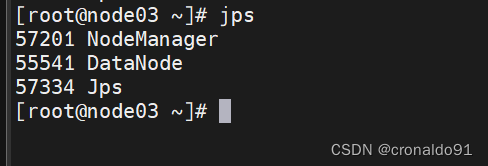

node03节点

2.NFS网关 连接 HDFS集群

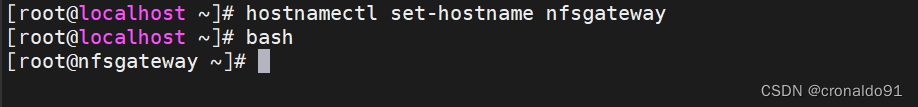

(1) Set the hostname

[root@localhost ~]# hostnamectl set-hostname nfsgateway

[root@localhost ~]# bash

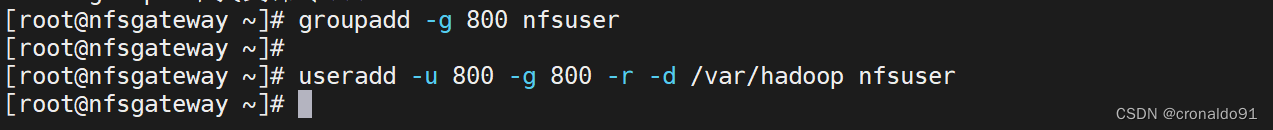

(2) Create the proxy user

nfsgateway node

[root@nfsgateway ~]# groupadd -g 800 nfsuser

[root@nfsgateway ~]# useradd -u 800 -g 800 -r -d /var/hadoop nfsuser

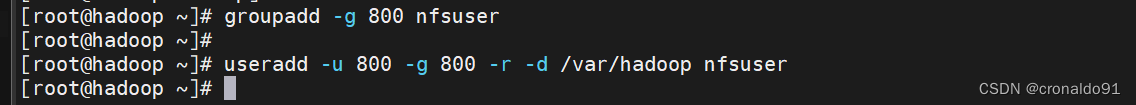

hadoop节点

[root@hadoop ~]# groupadd -g 800 nfsuser

[root@hadoop ~]# useradd -u 800 -g 800 -r -d /var/hadoop nfsuser

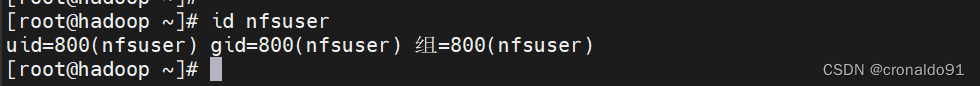

(3)查看用户id

[root@hadoop ~]# id nfsuser
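The article creates nfsuser with the same uid and gid (800) on both nodes. As a minimal sketch, the check below compares two lines of `id` output; the helper names `ids_of` and `same_ids` are hypothetical, and the remote call in the usage comment assumes you can SSH from hadoop to nfsgateway (at this point it will still ask for a password).

```shell
# Extract "uid:gid" from one line of `id` output,
# e.g. "uid=800(nfsuser) gid=800(nfsuser) groups=800(nfsuser)".
ids_of() {
  printf '%s\n' "$1" | sed -n 's/^uid=\([0-9]*\)[^ ]* gid=\([0-9]*\).*/\1:\2/p'
}

# Succeed only if both lines parse and carry the same uid and gid.
same_ids() {
  [ -n "$(ids_of "$1")" ] && [ "$(ids_of "$1")" = "$(ids_of "$2")" ]
}

# Usage on the cluster (assumption: run on the hadoop node):
#   same_ids "$(id nfsuser)" "$(ssh nfsgateway id nfsuser)" && echo "uid/gid match"
```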

(4) Authorize the proxy user

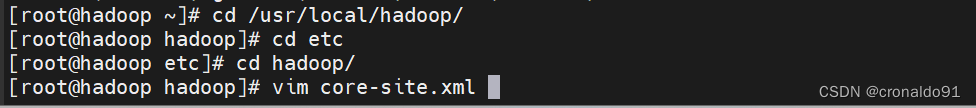

[root@hadoop ~]# cd /usr/local/hadoop/

[root@hadoop hadoop]# cd etc

[root@hadoop etc]# cd hadoop/

[root@hadoop hadoop]# vim core-site.xml

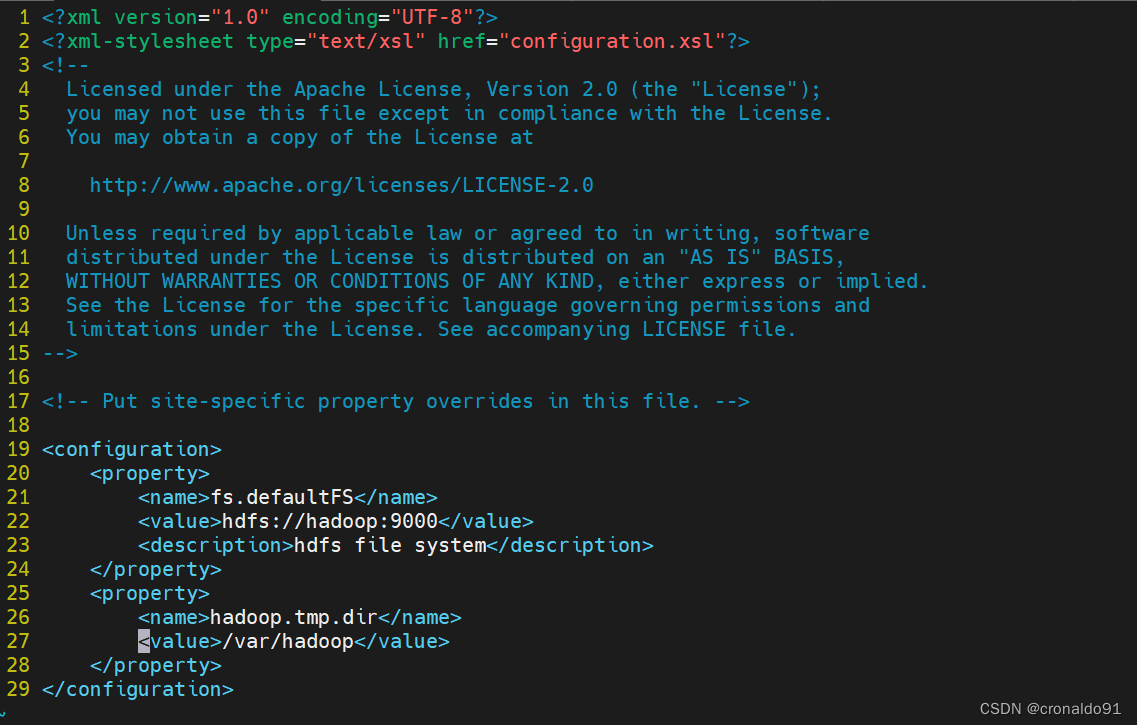

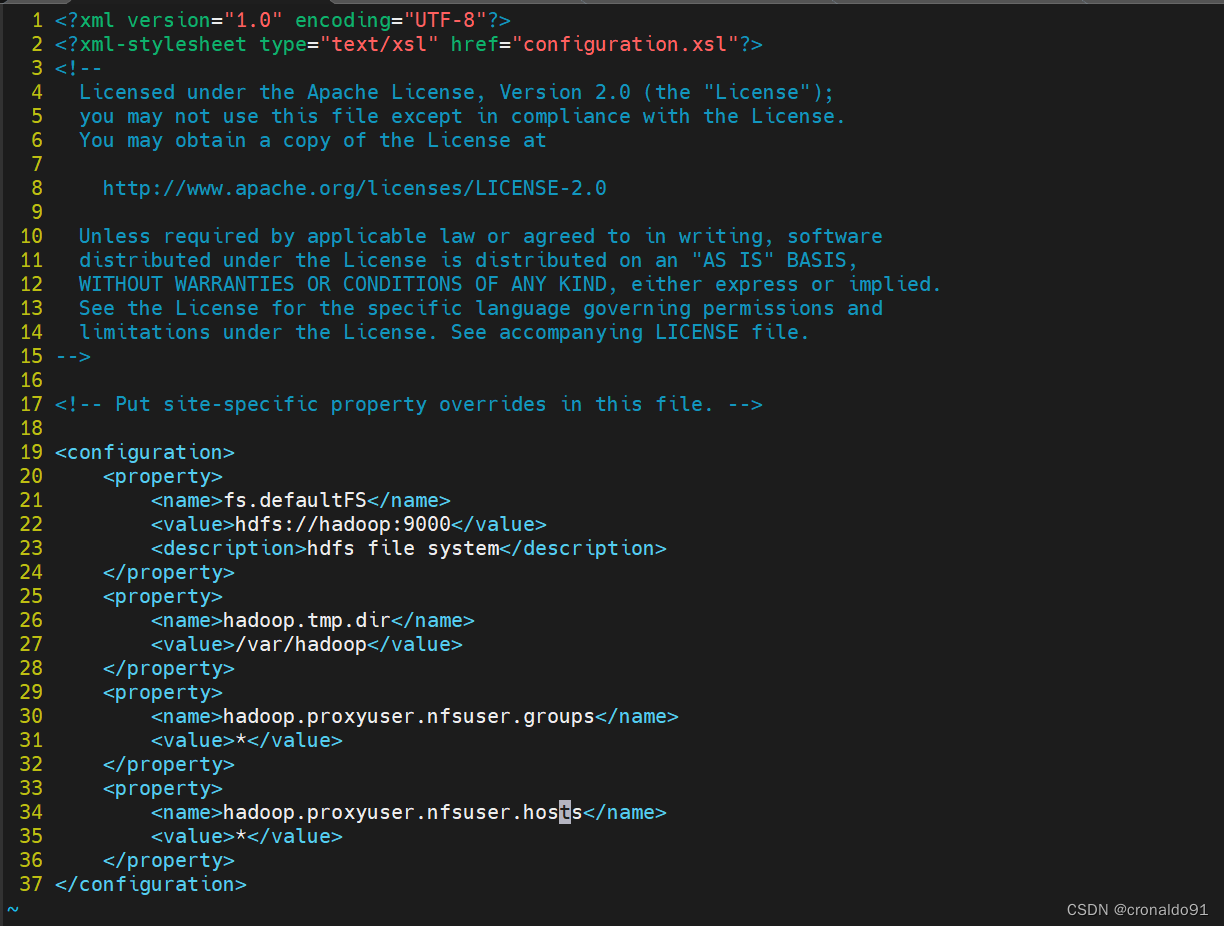

Before:

After:

<property>

<name>hadoop.proxyuser.nfsuser.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.nfsuser.hosts</name>

<value>*</value>

</property>
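To confirm the two proxy-user properties were saved as intended, a small reader for Hadoop-style XML can help. This is only a sketch: `get_prop` is a hypothetical helper, and it assumes each `<name>` and `<value>` element sits on its own line, as in the snippet above. On a node with Hadoop installed, `hdfs getconf -confKey <key>` should give the same answer.

```shell
# Print the <value> that follows a given <name> in a Hadoop XML config file.
get_prop() { # get_prop FILE NAME
  awk -v name="$2" '
    /<name>/ {
      gsub(/.*<name>|<\/name>.*/, "")   # keep only the text inside <name>
      found = ($0 == name)
      next
    }
    found && /<value>/ {
      gsub(/.*<value>|<\/value>.*/, "") # keep only the text inside <value>
      print
      exit
    }
  ' "$1"
}

# Usage (path taken from the article):
#   get_prop /usr/local/hadoop/etc/hadoop/core-site.xml hadoop.proxyuser.nfsuser.groups
#   get_prop /usr/local/hadoop/etc/hadoop/core-site.xml hadoop.proxyuser.nfsuser.hosts
```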

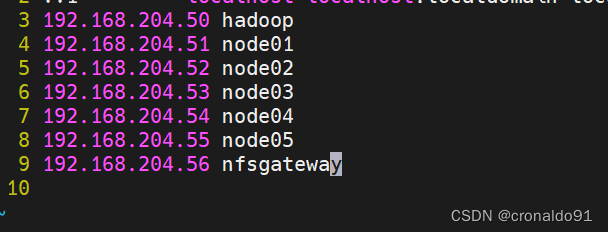

(5) Add the gateway to the hosts file

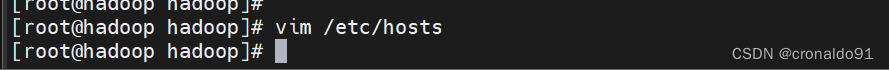

[root@hadoop hadoop]# vim /etc/hosts

……

192.168.204.56 nfsgateway
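If this step is rerun, editing by hand can leave duplicate entries. A minimal sketch of an idempotent append follows; `has_host` and `add_host` are hypothetical helper names, and the file argument exists only so the function can be tried on a scratch copy first.

```shell
# Succeed if NAME already appears as a hostname in FILE (comment lines are skipped).
has_host() { # has_host NAME FILE
  awk -v h="$1" '
    /^[[:space:]]*#/ { next }
    { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
    END { exit !found }
  ' "$2"
}

# Append "IP NAME" to FILE (default /etc/hosts) unless NAME is already there.
add_host() { # add_host IP NAME [FILE]
  has_host "$2" "${3:-/etc/hosts}" || printf '%s %s\n' "$1" "$2" >> "${3:-/etc/hosts}"
}

# Usage (as root on the hadoop node):
#   add_host 192.168.204.56 nfsgateway
```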

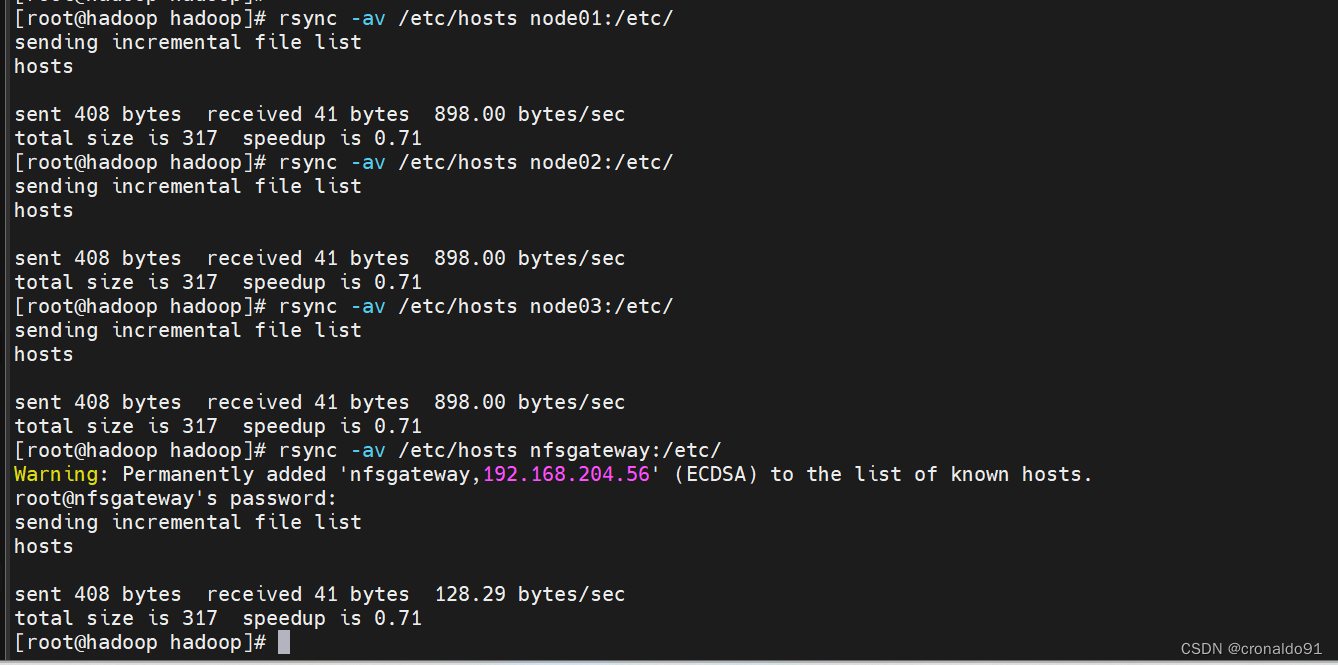

(6) Sync the hosts file to the other nodes

[root@hadoop hadoop]# rsync -av /etc/hosts node01:/etc/

sending incremental file list

hosts

sent 408 bytes received 41 bytes 898.00 bytes/sec

total size is 317 speedup is 0.71

[root@hadoop hadoop]# rsync -av /etc/hosts node02:/etc/

sending incremental file list

hosts

sent 408 bytes received 41 bytes 898.00 bytes/sec

total size is 317 speedup is 0.71

[root@hadoop hadoop]# rsync -av /etc/hosts node03:/etc/

sending incremental file list

hosts

sent 408 bytes received 41 bytes 898.00 bytes/sec

total size is 317 speedup is 0.71

[root@hadoop hadoop]# rsync -av /etc/hosts nfsgateway:/etc/

Warning: Permanently added 'nfsgateway,192.168.204.56' (ECDSA) to the list of known hosts.

root@nfsgateway's password:

sending incremental file list

hosts

sent 408 bytes received 41 bytes 128.29 bytes/sec

total size is 317 speedup is 0.71

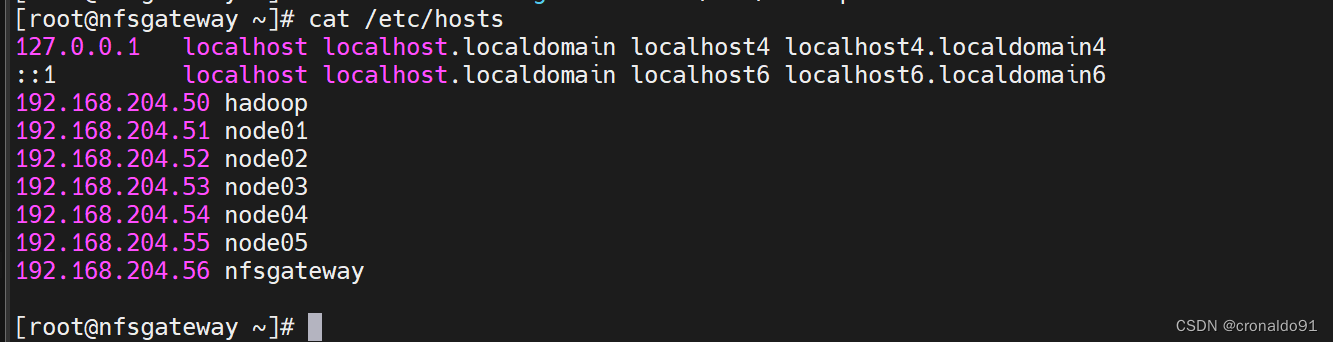

(7)查看 (nfsgateway节点)

[root@nfsgateway ~]# cat /etc/hosts

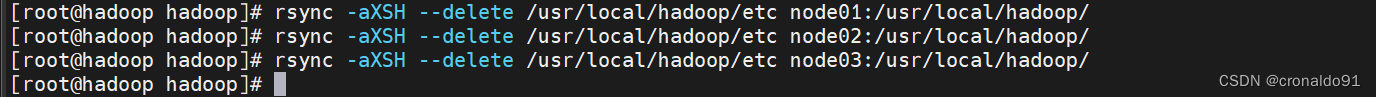

(8)同步Hadoop配置 (hadoop节点)

[root@hadoop hadoop]# rsync -aXSH --delete /usr/local/hadoop/etc node01:/usr/local/hadoop/

[root@hadoop hadoop]# rsync -aXSH --delete /usr/local/hadoop/etc node02:/usr/local/hadoop/

[root@hadoop hadoop]# rsync -aXSH --delete /usr/local/hadoop/etc node03:/usr/local/hadoop/
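The three rsync commands differ only in the target host, so they can be written as a loop. The sketch below uses a hypothetical `sync_conf` function that prints the commands by default and only runs them when `DRY_RUN=0` is set; it assumes node names without spaces.

```shell
# Push /usr/local/hadoop/etc to each node given as an argument.
# By default the commands are only printed (dry run).
sync_conf() {
  for node in "$@"; do
    cmd="rsync -aXSH --delete /usr/local/hadoop/etc $node:/usr/local/hadoop/"
    if [ "${DRY_RUN:-1}" = 1 ]; then
      echo "$cmd"
    else
      $cmd
    fi
  done
}

# Usage:
#   sync_conf node01 node02 node03              # print what would run
#   DRY_RUN=0 sync_conf node01 node02 node03    # actually sync
```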

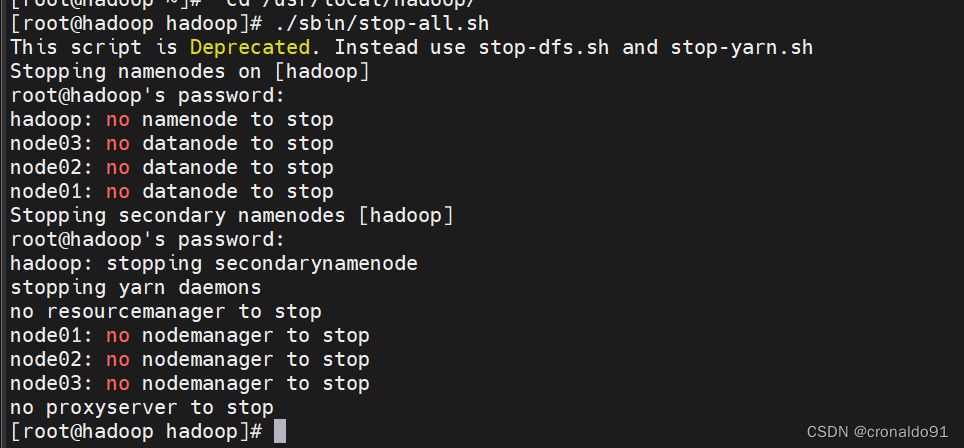

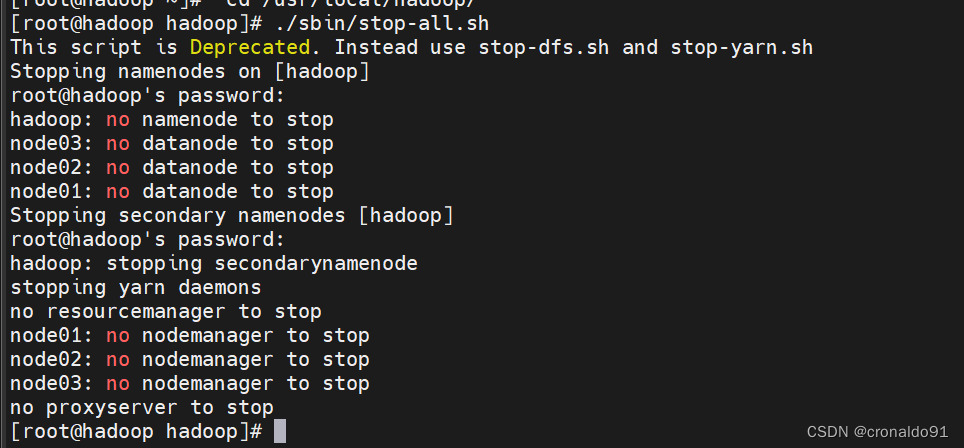

(9)停止服务

[root@hadoop hadoop]# ./sbin/stop-all.sh

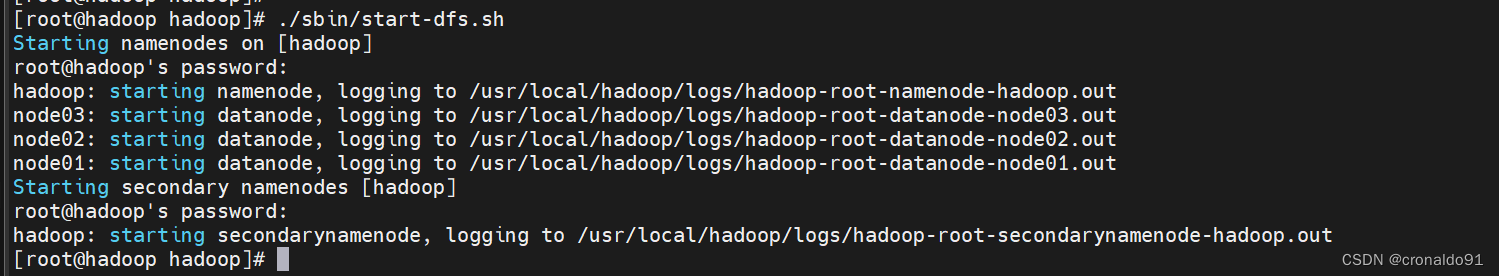

(10)启动服务

[root@hadoop hadoop]# ./sbin/start-dfs.sh

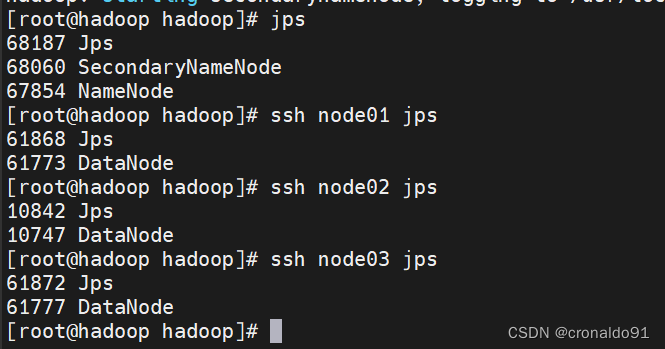

(12)查看jps

[root@hadoop hadoop]# jps

68187 Jps

68060 SecondaryNameNode

67854 NameNode

[root@hadoop hadoop]# ssh node01 jps

61868 Jps

61773 DataNode

[root@hadoop hadoop]# ssh node02 jps

10842 Jps

10747 DataNode

[root@hadoop hadoop]# ssh node03 jps

61872 Jps

61777 DataNode
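Reading four jps listings by eye is error-prone, so a small check can be scripted. In this sketch, `has_daemon` is a hypothetical helper that matches the process name exactly; a plain `grep NameNode` would also match SecondaryNameNode.

```shell
# Succeed if the jps output in $1 contains a process named exactly $2.
has_daemon() { # has_daemon "JPS_OUTPUT" NAME
  printf '%s\n' "$1" | awk -v d="$2" '$2 == d { ok = 1 } END { exit !ok }'
}

# Usage on the hadoop node (assumes passwordless SSH to the DataNodes):
#   for n in node01 node02 node03; do
#     has_daemon "$(ssh "$n" jps)" DataNode || echo "$n: DataNode is not running"
#   done
```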

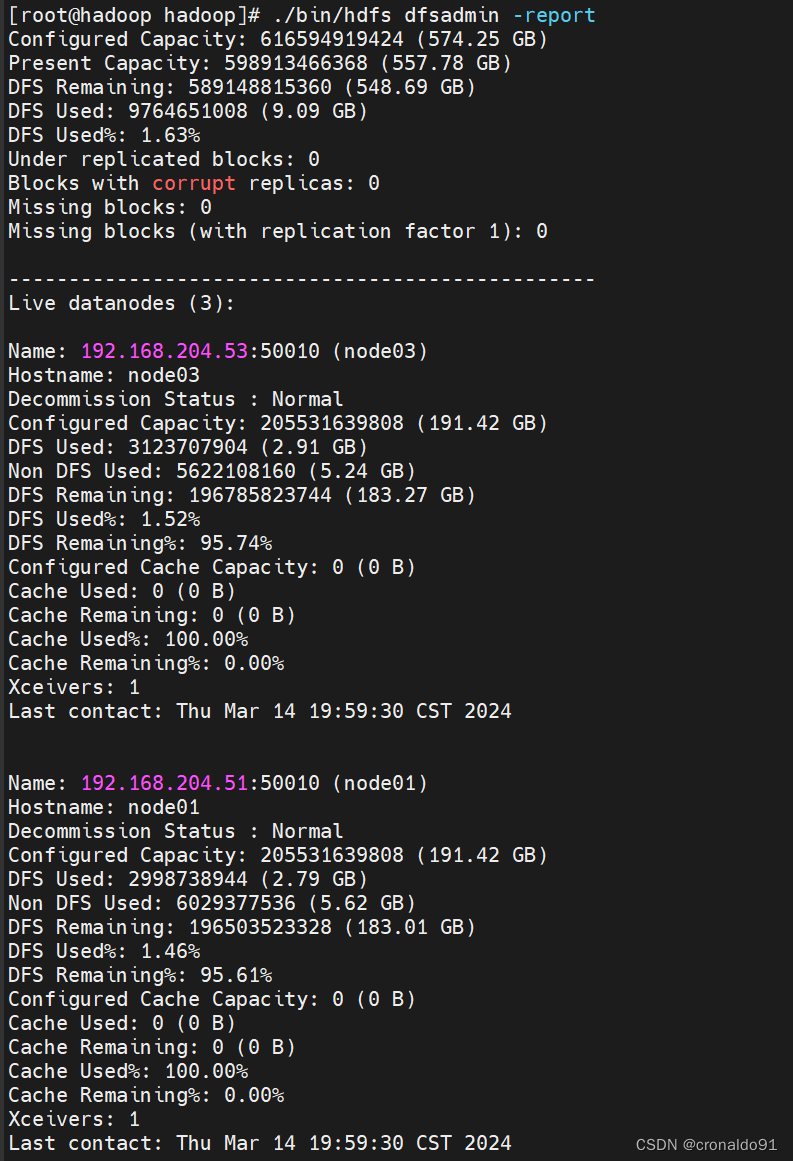

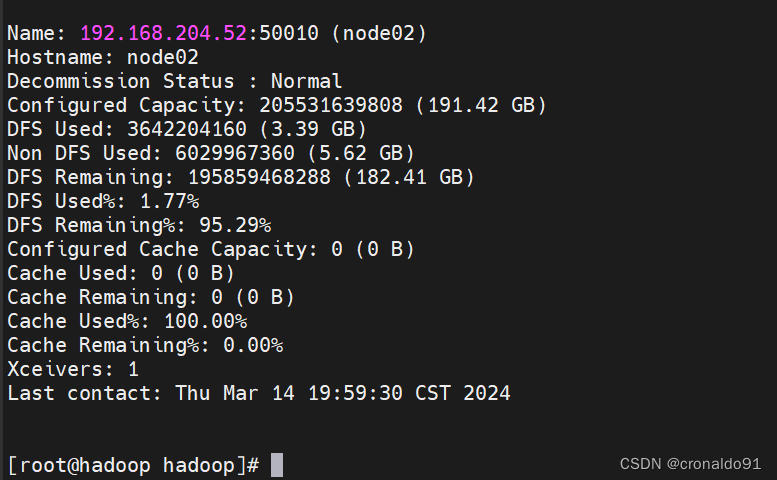

(13) Verify the cluster

[root@hadoop hadoop]# ./bin/hdfs dfsadmin -report

Configured Capacity: 616594919424 (574.25 GB)

Present Capacity: 598913466368 (557.78 GB)

DFS Remaining: 589148815360 (548.69 GB)

DFS Used: 9764651008 (9.09 GB)

DFS Used%: 1.63%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

-------------------------------------------------

Live datanodes (3):

Name: 192.168.204.53:50010 (node03)

Hostname: node03

Decommission Status : Normal

Configured Capacity: 205531639808 (191.42 GB)

DFS Used: 3123707904 (2.91 GB)

Non DFS Used: 5622108160 (5.24 GB)

DFS Remaining: 196785823744 (183.27 GB)

DFS Used%: 1.52%

DFS Remaining%: 95.74%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Mar 14 19:59:30 CST 2024

Name: 192.168.204.51:50010 (node01)

Hostname: node01

Decommission Status : Normal

Configured Capacity: 205531639808 (191.42 GB)

DFS Used: 2998738944 (2.79 GB)

Non DFS Used: 6029377536 (5.62 GB)

DFS Remaining: 196503523328 (183.01 GB)

DFS Used%: 1.46%

DFS Remaining%: 95.61%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Mar 14 19:59:30 CST 2024

Name: 192.168.204.52:50010 (node02)

Hostname: node02

Decommission Status : Normal

Configured Capacity: 205531639808 (191.42 GB)

DFS Used: 3642204160 (3.39 GB)

Non DFS Used: 6029967360 (5.62 GB)

DFS Remaining: 195859468288 (182.41 GB)

DFS Used%: 1.77%

DFS Remaining%: 95.29%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Mar 14 19:59:30 CST 2024
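The key line in this report is `Live datanodes (3):`. A minimal sketch for pulling out that number in a script follows; `live_datanodes` is a hypothetical helper and relies on the header format shown above.

```shell
# Read `hdfs dfsadmin -report` output on stdin and print the live DataNode count.
live_datanodes() {
  sed -n 's/^Live datanodes (\([0-9]*\)):.*/\1/p'
}

# Usage on the hadoop node:
#   n=$(./bin/hdfs dfsadmin -report | live_datanodes)
#   [ "$n" = 3 ] || echo "expected 3 live DataNodes, got: $n"
```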

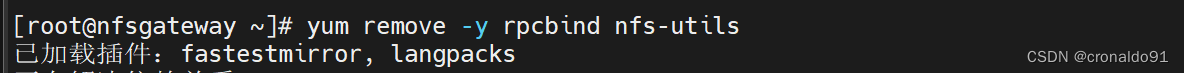

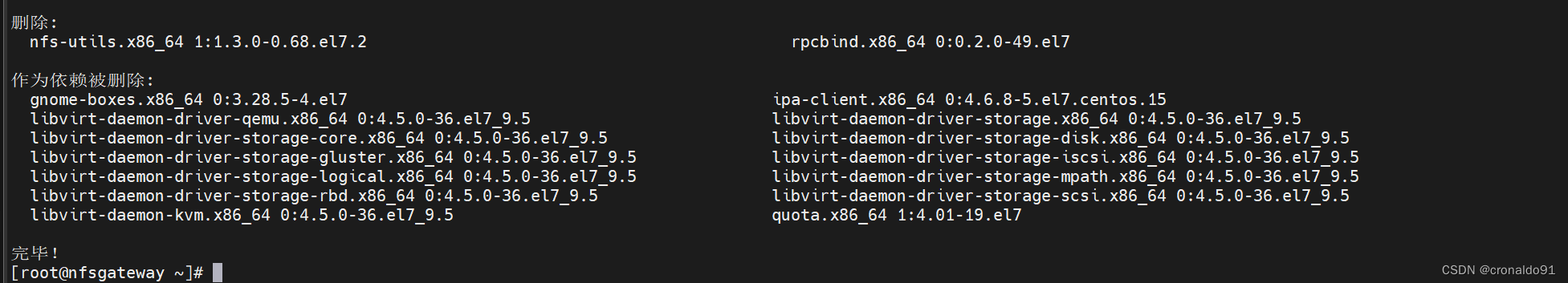

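(14) Uninstall the system NFS services (nfsgateway node)

The Hadoop NFS gateway runs its own portmap and nfs3 daemons, so the system rpcbind and nfs-utils packages are removed first to avoid port conflicts.

[root@nfsgateway ~]# yum remove -y rpcbind nfs-utils

Done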

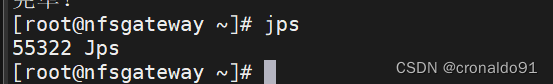

(15) Install the Java runtime

[root@nfsgateway ~]# yum install -y java-1.8.0-openjdk-devel.x86_64

Check jps

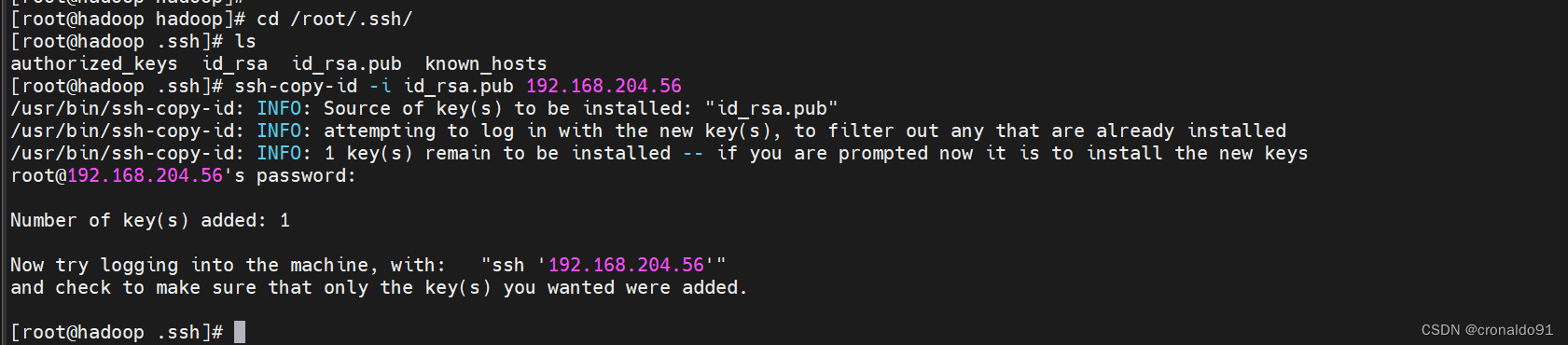

(16) Set up passwordless SSH

[root@hadoop hadoop]# cd /root/.ssh/

[root@hadoop .ssh]# ls

authorized_keys id_rsa id_rsa.pub known_hosts

[root@hadoop .ssh]# ssh-copy-id -i id_rsa.pub 192.168.204.56

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.204.56's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.204.56'"

and check to make sure that only the key(s) you wanted were added.

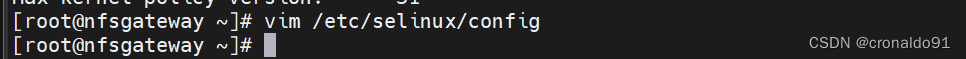

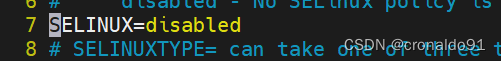

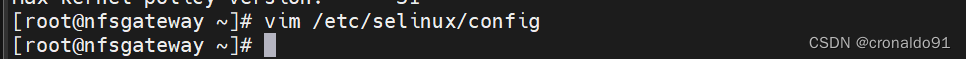

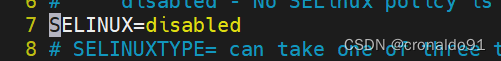

(17) Disable SELinux (requires a reboot)

[root@nfsgateway ~]# vim /etc/selinux/config

……

SELINUX=disabled

……
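The same change can be made without opening an editor. This is a sketch assuming GNU sed (as shipped on yum-based systems); `disable_selinux` is a hypothetical helper, and the optional file argument is there so it can be tried on a copy first.

```shell
# Set SELINUX=disabled in the SELinux config file (default /etc/selinux/config).
# The pattern requires "=" right after SELINUX, so SELINUXTYPE= is left alone.
disable_selinux() { # disable_selinux [FILE]
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "${1:-/etc/selinux/config}"
}

# Usage (as root on nfsgateway), followed by a reboot:
#   disable_selinux && reboot
# After the reboot, `getenforce` should report "Disabled".
```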

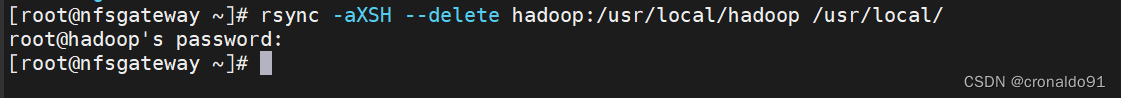

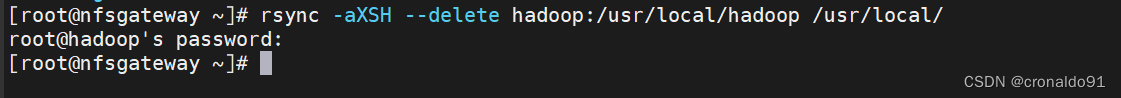

(18) Copy the Hadoop installation and configuration to the gateway

[root@nfsgateway ~]# rsync -aXSH --delete hadoop:/usr/local/hadoop /usr/local/

(19) Edit the NFS gateway configuration (nfsgateway node)

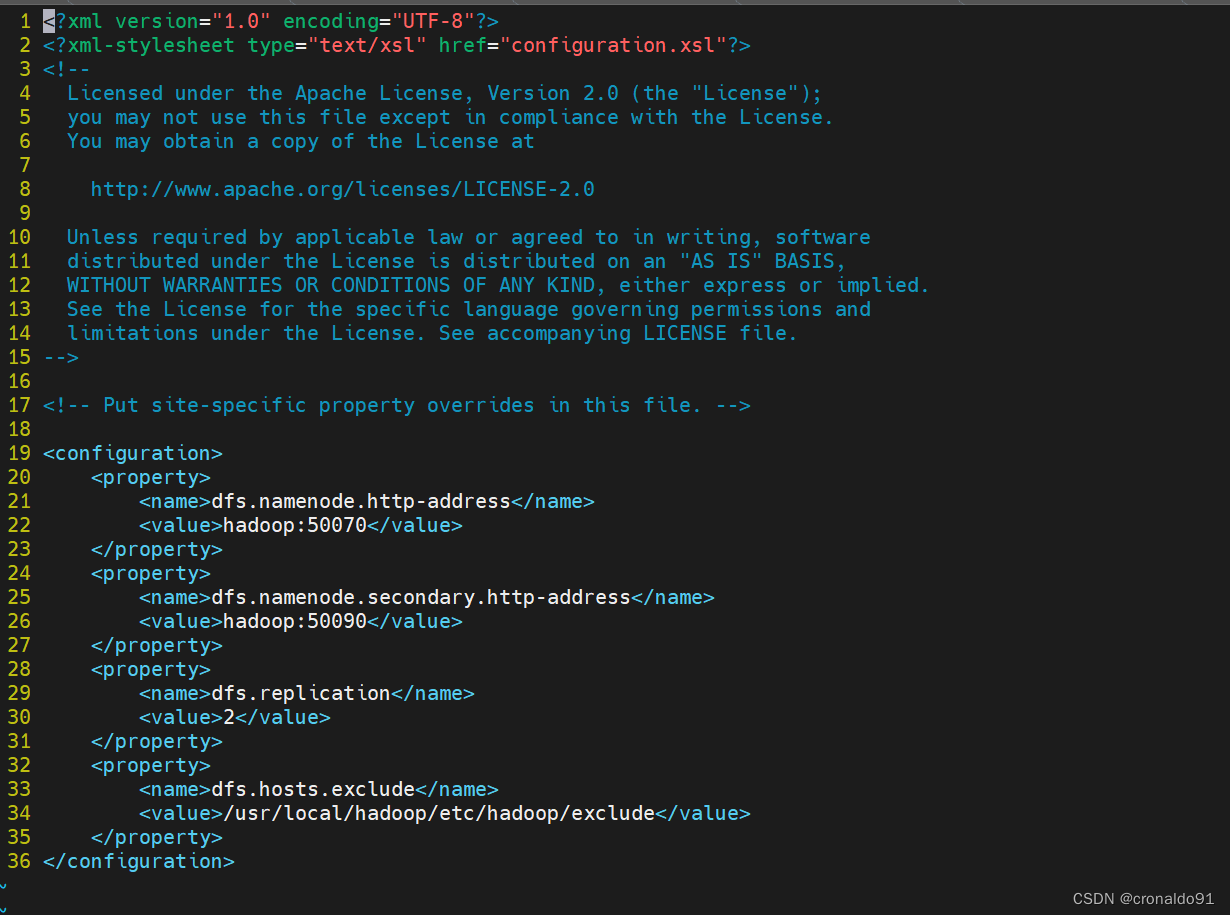

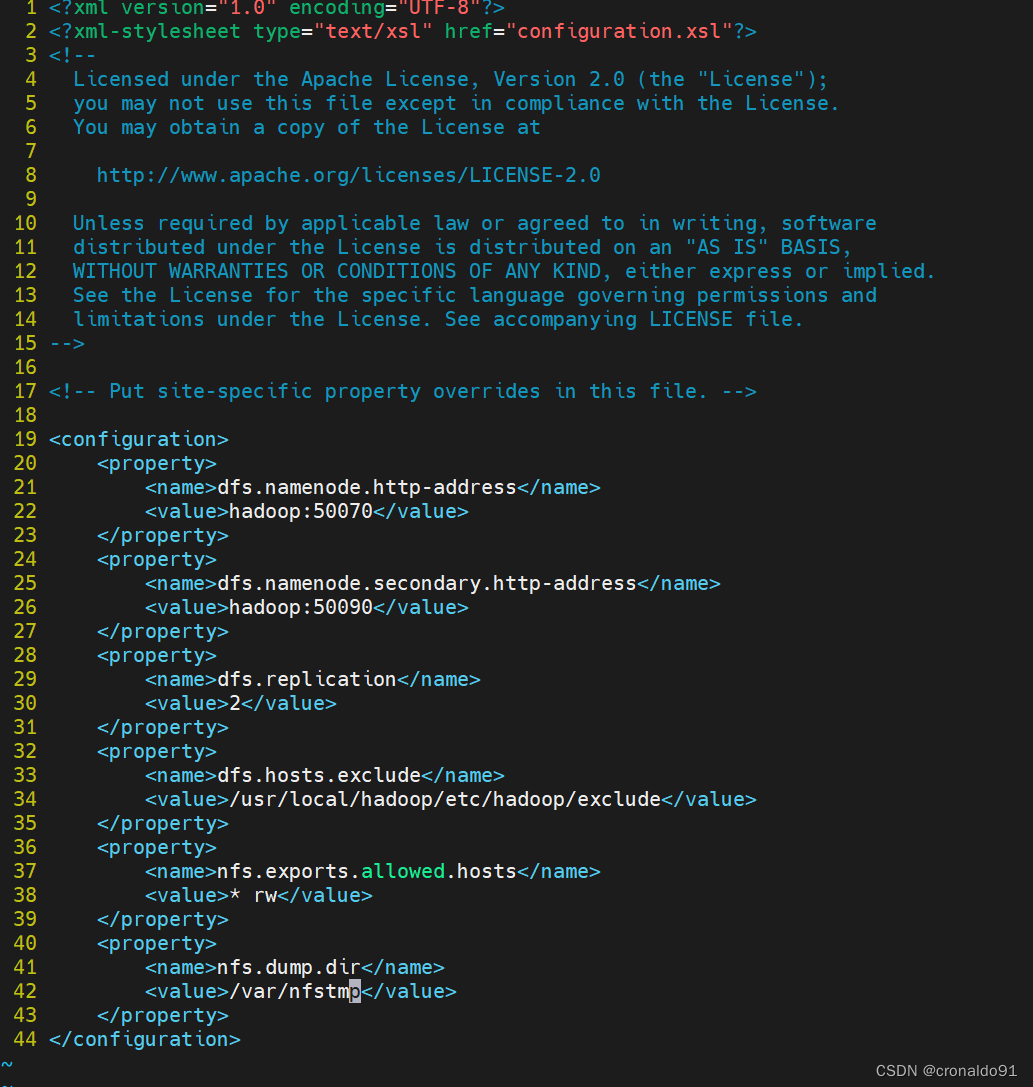

[root@nfsgateway hadoop]# vim hdfs-site.xml

Before:

After:

<property>

<name>nfs.exports.allowed.hosts</name>

<value>* rw</value>

</property>

<property>

<name>nfs.dump.dir</name>

<value>/var/nfstmp</value>

</property>

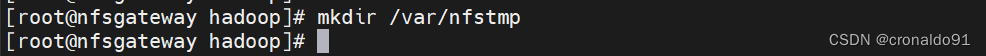

(20)创建转储目录

[root@nfsgateway hadoop]# mkdir /var/nfstmp

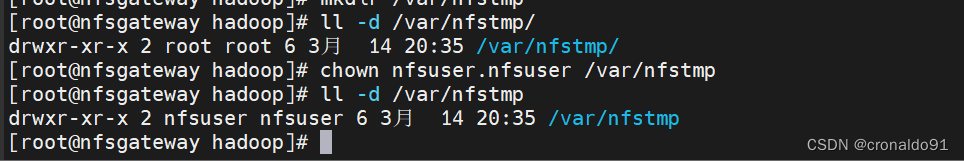

(21)为代理用户授权

[root@nfsgateway hadoop]# chown nfsuser.nfsuser /var/nfstmp

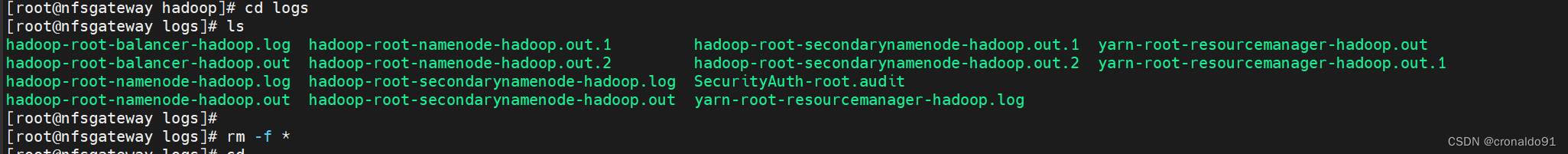

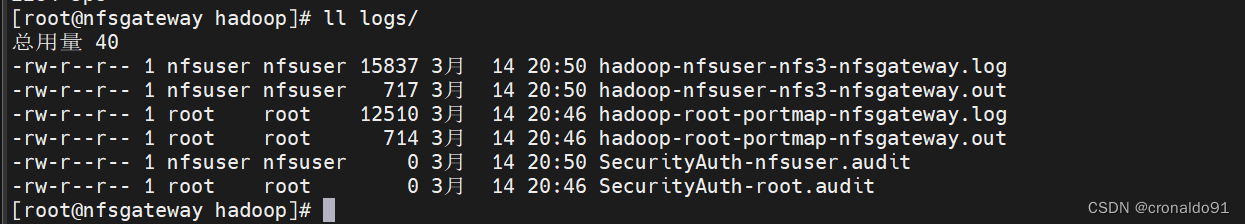

(22)在日志文件夹为代理用户授权

删除

[root@nfsgateway hadoop]# cd logs

[root@nfsgateway logs]# ls

[root@nfsgateway logs]# rm -f *

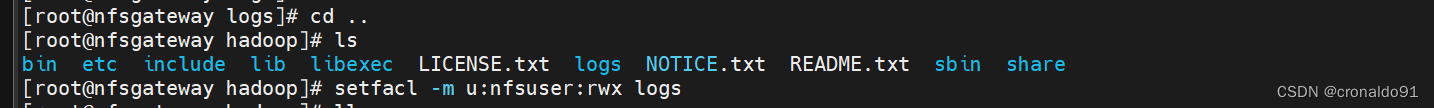

Grant access

[root@nfsgateway hadoop]# setfacl -m u:nfsuser:rwx logs

Check the ACL

[root@nfsgateway hadoop]# getfacl logs
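The line to look for in the getfacl output is `user:nfsuser:rwx`. As a sketch, the hypothetical helper `acl_grants` below checks for it; it matches the whole line, so an entry whose effective rights are reduced by the mask (getfacl then appends an `#effective:` note) does not count.

```shell
# Read getfacl output on stdin; succeed if USER has exactly PERMS as a named-user entry.
acl_grants() { # acl_grants USER PERMS
  grep -qx "user:$1:$2"
}

# Usage (in /usr/local/hadoop on nfsgateway):
#   getfacl logs | acl_grants nfsuser rwx && echo "nfsuser can write to logs"
```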

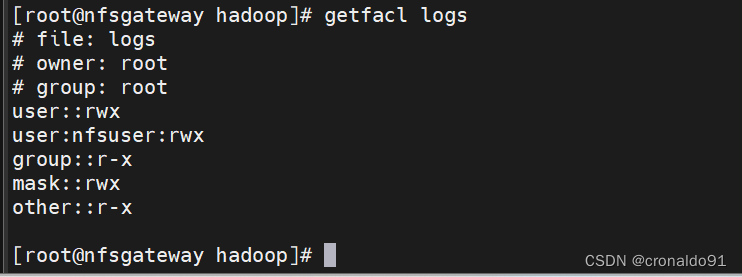

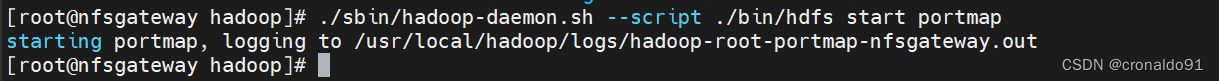

(23)启动portmap

[root@nfsgateway hadoop]# ./sbin/hadoop-daemon.sh --script ./bin/hdfs start portmap

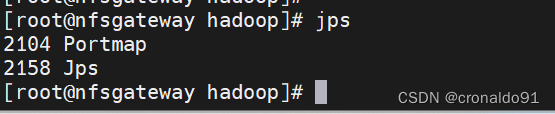

查看jps

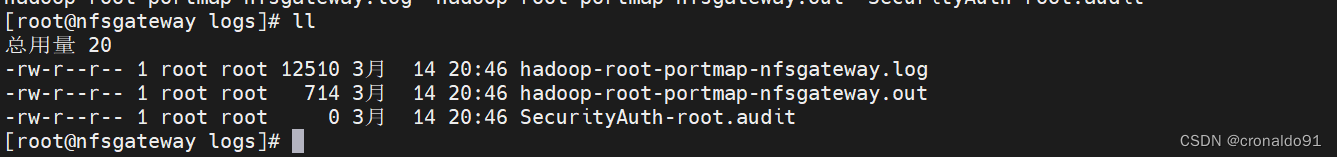

查看日志

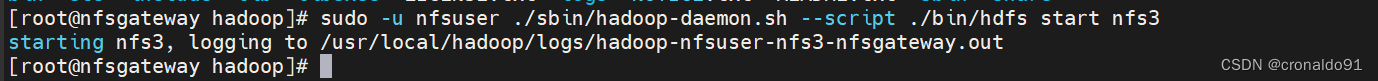

(24)启动 nfs3

[root@nfsgateway hadoop]# sudo -u nfsuser ./sbin/hadoop-daemon.sh --script ./bin/hdfs start nfs3

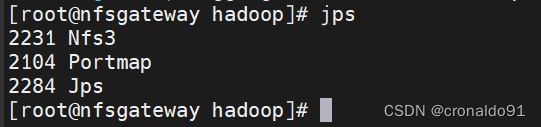

查看jps

查看日志权限

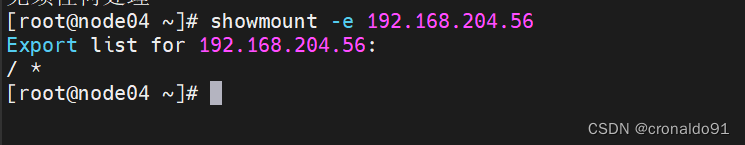
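Once both daemons are running, the gateway should have portmapper, mountd, and nfs registered. The sketch below checks `rpcinfo -p` output for those three service names; `gateway_services_up` is a hypothetical helper, and it assumes the service name is the fifth column. Run rpcinfo from a machine that has it installed, such as one of the NFS clients.

```shell
# Read `rpcinfo -p` output on stdin; succeed if portmapper, mountd and nfs are all listed.
gateway_services_up() {
  awk '
    { seen[$5] = 1 }
    END { exit !(seen["portmapper"] && seen["mountd"] && seen["nfs"]) }
  '
}

# Usage:
#   rpcinfo -p 192.168.204.56 | gateway_services_up && echo "gateway is serving NFS"
```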

3. NFS客户端挂载HDFS文件系统

(1)安装NFS (node04节点)

[root@node04 ~]# yum install -y nfs-utils

查看

[root@node04 ~]# showmount -e 192.168.204.56

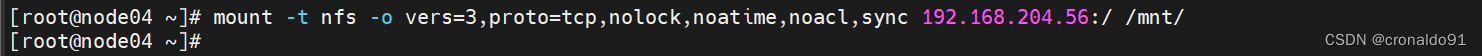

(2)客户端一mount挂载

[root@node04 ~]# mount -t nfs -o vers=3,proto=tcp,nolock,noatime,noacl,sync 192.168.204.56:/ /mnt/
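A quick way to confirm that the mount took effect is to look the mount point up in `/proc/mounts`. In this sketch `nfs_mounted` is a hypothetical helper; pass the mount point without a trailing slash, since that is how the kernel lists it.

```shell
# Succeed if MOUNTPOINT is listed with an nfs filesystem type.
# MOUNTS_FILE defaults to /proc/mounts (fields: device, mount point, type, options, ...).
nfs_mounted() { # nfs_mounted MOUNTPOINT [MOUNTS_FILE]
  awk -v m="$1" '$2 == m && $3 ~ /^nfs/ { ok = 1 } END { exit !ok }' "${2:-/proc/mounts}"
}

# Usage on the client:
#   nfs_mounted /mnt && echo "HDFS is mounted on /mnt"
```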

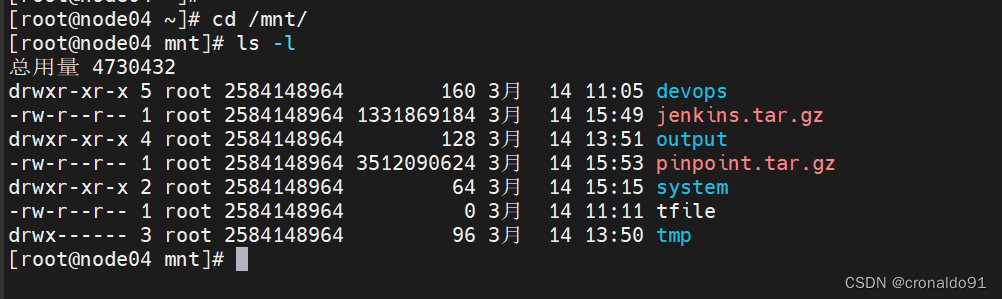

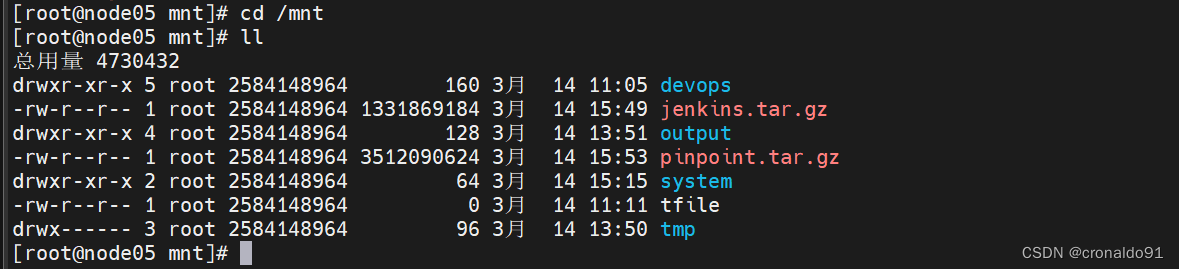

Check the contents

[root@node04 ~]# cd /mnt/

[root@node04 mnt]# ls -l

total 4730432

drwxr-xr-x 5 root 2584148964 160 Mar 14 11:05 devops

-rw-r--r-- 1 root 2584148964 1331869184 Mar 14 15:49 jenkins.tar.gz

drwxr-xr-x 4 root 2584148964 128 Mar 14 13:51 output

-rw-r--r-- 1 root 2584148964 3512090624 Mar 14 15:53 pinpoint.tar.gz

drwxr-xr-x 2 root 2584148964 64 Mar 14 15:15 system

-rw-r--r-- 1 root 2584148964 0 Mar 14 11:11 tfile

drwx------ 3 root 2584148964 96 Mar 14 13:50 tmp

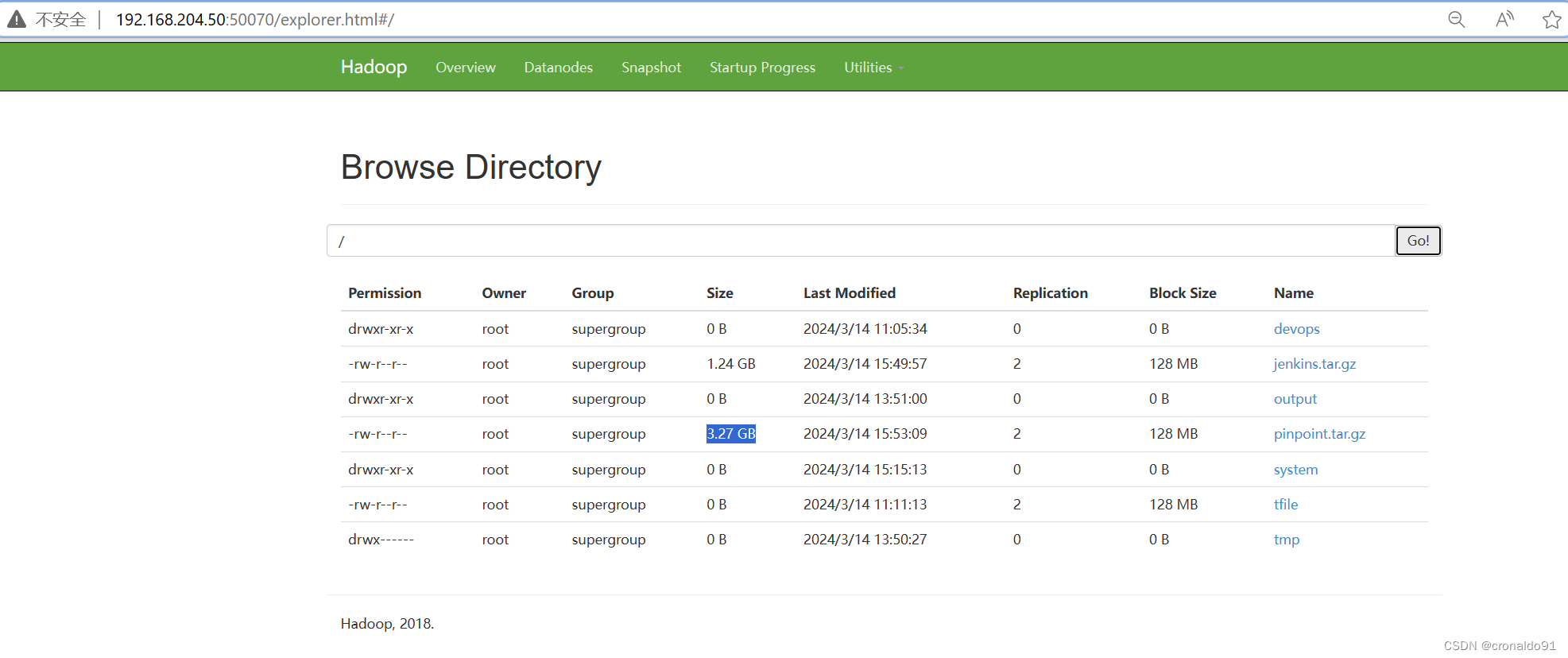

(3)web查看

与NFS客户端内容一致

http://192.168.204.50:50070/

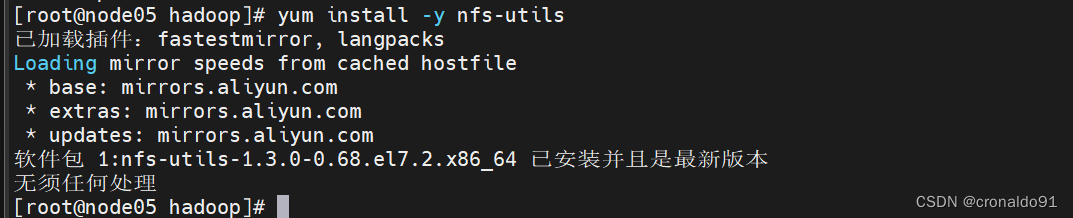

(4)安装NFS (node05节点)

[root@node05 ~]# yum install -y nfs-utils

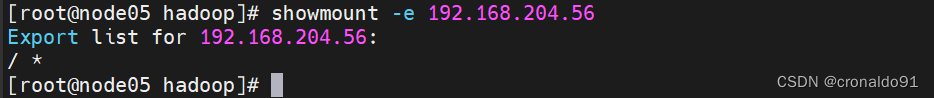

查看

[root@node05 ~]# showmount -e 192.168.204.56

(5)客户端二mount挂载

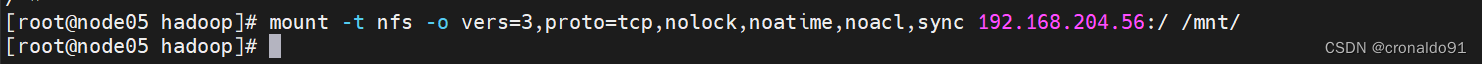

[root@node05 ~]# mount -t nfs -o vers=3,proto=tcp,nolock,noatime,noacl,sync 192.168.204.56:/ /mnt/

查看

[root@node05 mnt]# cd /mnt

[root@node05 mnt]# ll

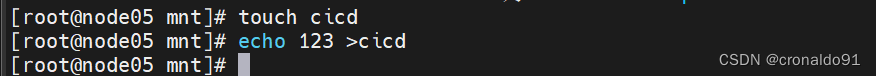

新建文件

[root@node05 mnt]# touch cicd

[root@node05 mnt]# echo 123 >cicd

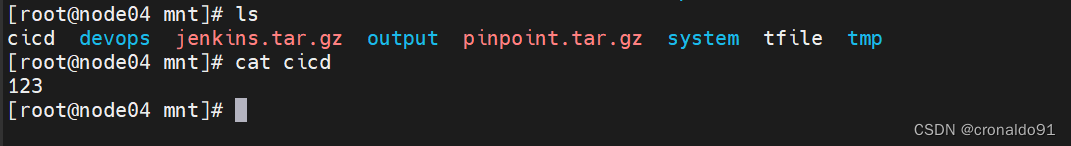

(6)客户端一查看

[root@node04 mnt]# ls

cicd devops jenkins.tar.gz output pinpoint.tar.gz system tfile tmp

[root@node04 mnt]# cat cicd

二、问题

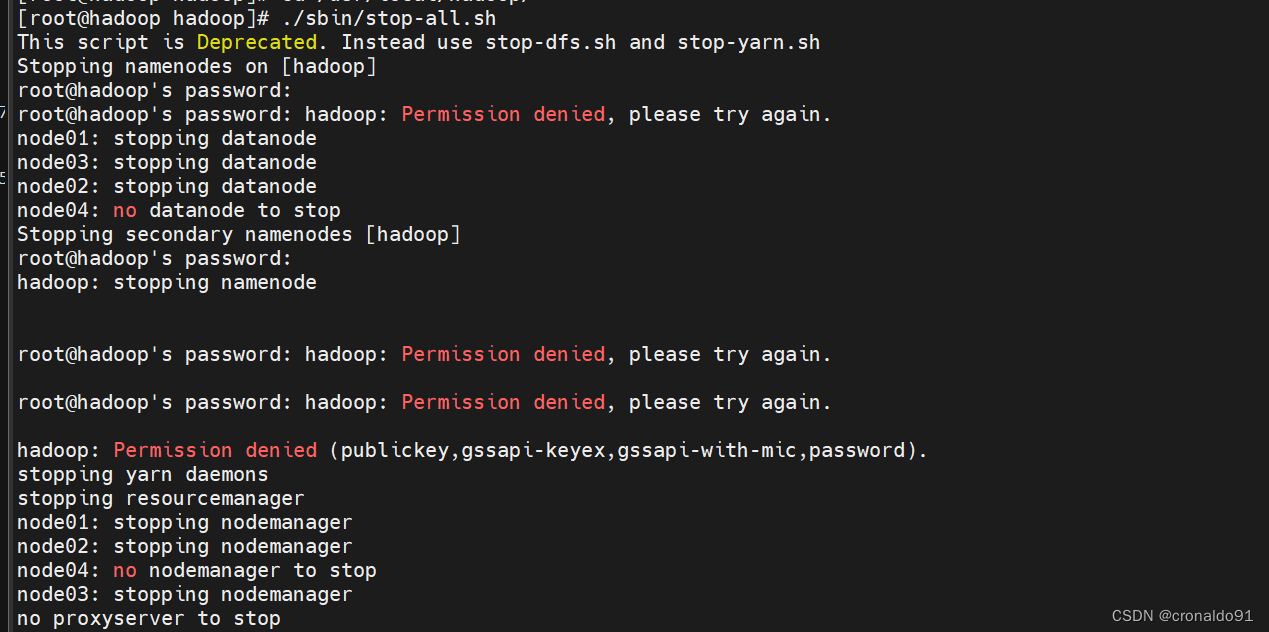

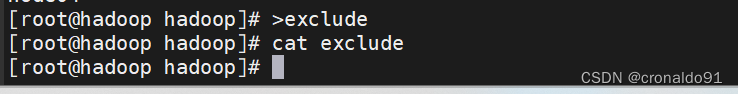

1.关闭服务报错

(1)报错

node04: no datanode to stop

(2)原因分析

配置文件未移除node04节点。

(3)解决方法

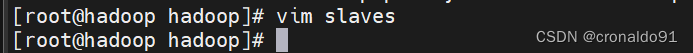

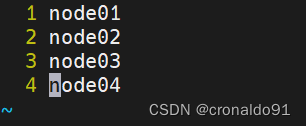

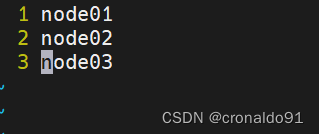

[root@hadoop hadoop]# vim slaves

修改前:

修改后:

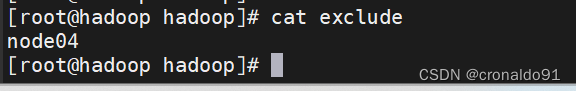
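The edit to slaves amounts to deleting the node04 line. A minimal non-interactive sketch follows; `remove_node` is a hypothetical helper that drops only lines matching the name exactly and rewrites the file in place.

```shell
# Remove every line that is exactly NAME from FILE.
remove_node() { # remove_node NAME FILE
  tmp=$(mktemp) || return 1
  # grep exits with status 1 when no lines are left; that is not an error here.
  grep -vxF -- "$1" "$2" > "$tmp" || true
  cat "$tmp" > "$2"
  rm -f "$tmp"
}

# Usage (in /usr/local/hadoop/etc/hadoop on the hadoop node):
#   remove_node node04 slaves
```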

查看排除文件

[root@hadoop hadoop]# cat exclude

清空查看

[root@hadoop hadoop]# >exclude

[root@hadoop hadoop]# cat exclude

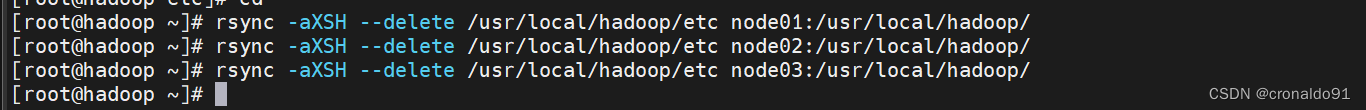

重新同步

[root@hadoop ~]# rsync -aXSH --delete /usr/local/hadoop/etc node01:/usr/local/hadoop/

[root@hadoop ~]# rsync -aXSH --delete /usr/local/hadoop/etc node02:/usr/local/hadoop/

[root@hadoop ~]# rsync -aXSH --delete /usr/local/hadoop/etc node03:/usr/local/hadoop/

成功关闭服务

[root@hadoop hadoop]# ./sbin/stop-all.sh

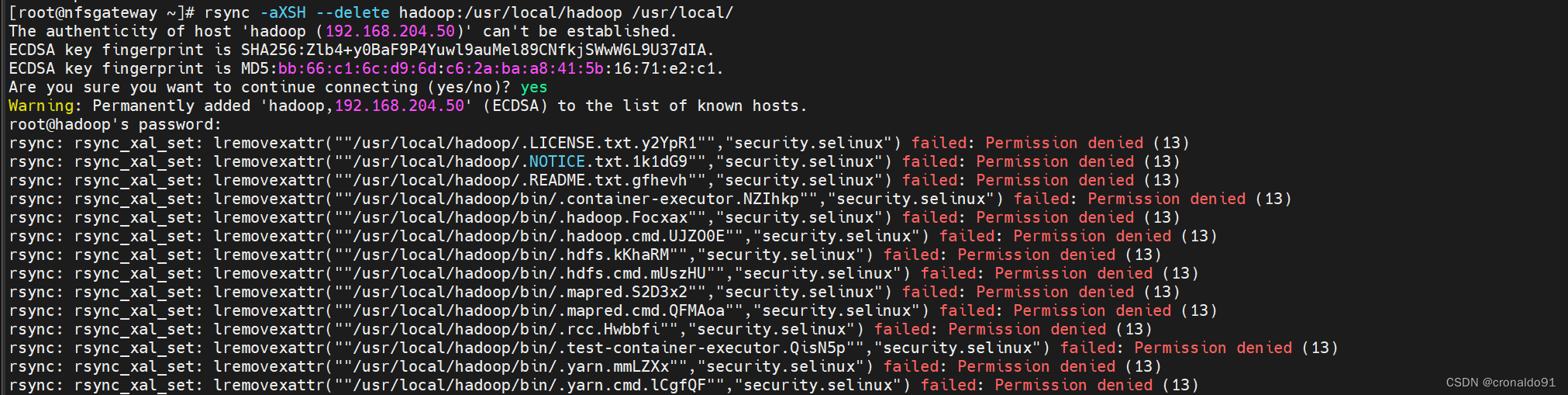

2.rsync 同步报错

(1)报错

(2)原因分析

未关闭安全机制。

(3)解决方法

关闭安全机制(需要reboot重启)

[root@nfsgateway ~]# vim /etc/selinux/config

……

SELINUX=disabled

……

Hadoop now syncs successfully:

[root@nfsgateway ~]# rsync -aXSH --delete hadoop:/usr/local/hadoop /usr/local/

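3. What do the mount options mean?

(1) Options

1) Use NFS version 3

vers=3

2) Use TCP only as the transport protocol

proto=tcp

3) Disable file locking, because the gateway does not support NLM (Network Lock Manager)

nolock

4) Disable access-time updates

noatime

5) Disable ACL extended permissions

noacl

6) Write synchronously to avoid reordered writes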

sync
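Put together, the six options form the comma-separated string passed to `mount -o`. The sketch below only assembles and prints the command; `join_opts` is a hypothetical helper, and the server address and mount point are the ones used earlier in the article.

```shell
# Join all arguments with commas (runs in a subshell so IFS is not changed for the caller).
join_opts() (
  IFS=,
  printf '%s\n' "$*"
)

opts=$(join_opts vers=3 proto=tcp nolock noatime noacl sync)

# Print the resulting command rather than running it.
echo "mount -t nfs -o $opts 192.168.204.56:/ /mnt/"
```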